19. 🛠️ Exercise - Model Training#

In Colab, be sure to select ‘T4 GPU’ under ‘Edit’->’Notebook Settings’->’Hardware accelerator’ section. Or under Connect > Change runtime

19.1. Workshop Sample Dataset#

# Install Ultralytics YOLO

!pip install ultralytics

# Setup Paths

# Google Cloud Storage URLs

dataset_url = "https://storage.googleapis.com/nmfs_odp_pifsc/PIFSC/ESD/ARP/pifsc-ai-data-repository/fish-detection/workshop/fish_dataset.zip"

# Local paths for dataset & model

dataset_zip_path = "/content/fish_dataset.zip"

dataset_extract_path = "/content/fish_dataset/"

# Download Training Dataset

import os

import requests

import zipfile

from tqdm import tqdm

def download_file(url, output_path):

response = requests.get(url, stream=True)

total_size = int(response.headers.get("content-length", 0))

with open(output_path, "wb") as file, tqdm(

desc=f"Downloading {os.path.basename(output_path)}",

total=total_size,

unit="B",

unit_scale=True,

unit_divisor=1024,

) as bar:

for data in response.iter_content(chunk_size=1024):

file.write(data)

bar.update(len(data))

# Download dataset if it doesn't exist

if not os.path.exists(dataset_zip_path):

download_file(dataset_url, dataset_zip_path)

else:

print("✔ Dataset already downloaded.")

# Extract dataset

if not os.path.exists(dataset_extract_path):

with zipfile.ZipFile(dataset_zip_path, "r") as zip_ref:

zip_ref.extractall(dataset_extract_path)

print(f"✔ Extracted dataset to {dataset_extract_path}")

else:

print("✔ Dataset already extracted.")

Downloading fish_dataset.zip: 100%|██████████| 39.5M/39.5M [00:02<00:00, 14.1MB/s]

✔ Extracted dataset to /content/fish_dataset/

20. Simple Training Script#

import os

import torch

from ultralytics import YOLO

# Determine the device to use

device = 'cuda' if torch.cuda.is_available() else 'cpu'

print(f"Using device: {device}")

# Set paths

base_path = "/content/fish_dataset/"

yaml_file_path = os.path.join(base_path, 'dataset.yaml')

# Load the smaller YOLO11 model

small_model = YOLO("yolo11n.pt")

# Move the model to the correct device

small_model.model.to(device)

# Training hyperparameters

small_model.train(

data=yaml_file_path,

epochs=5,

imgsz=640,

batch=16,

device=device,

project='training_logs', #logging directory

)

print("Training complete!")

# Save the trained model

trained_model_path = os.path.join(base_path, "yolo11n_fish_trained_v1.pt")

small_model.save(trained_model_path)

print(f"Trained model saved to {trained_model_path}")

# Save the model weights separately for further use

weights_path = os.path.join(base_path, "yolo11n_fish_weights_v1.pth")

torch.save(small_model.model.state_dict(), weights_path)

print(f"Weights saved to {weights_path}")

Creating new Ultralytics Settings v0.0.6 file ✅

View Ultralytics Settings with 'yolo settings' or at '/root/.config/Ultralytics/settings.json'

Update Settings with 'yolo settings key=value', i.e. 'yolo settings runs_dir=path/to/dir'. For help see https://docs.ultralytics.com/quickstart/#ultralytics-settings.

Using device: cuda

Downloading https://github.com/ultralytics/assets/releases/download/v8.3.0/yolo11n.pt to 'yolo11n.pt'...

100%|██████████| 5.35M/5.35M [00:00<00:00, 313MB/s]

Ultralytics 8.3.83 🚀 Python-3.11.11 torch-2.5.1+cu124 CUDA:0 (Tesla T4, 15095MiB)

engine/trainer: task=detect, mode=train, model=yolo11n.pt, data=/content/fish_dataset/dataset.yaml, epochs=5, time=None, patience=100, batch=16, imgsz=640, save=True, save_period=-1, cache=False, device=cuda, workers=8, project=training_logs, name=train, exist_ok=False, pretrained=True, optimizer=auto, verbose=True, seed=0, deterministic=True, single_cls=False, rect=False, cos_lr=False, close_mosaic=10, resume=False, amp=True, fraction=1.0, profile=False, freeze=None, multi_scale=False, overlap_mask=True, mask_ratio=4, dropout=0.0, val=True, split=val, save_json=False, save_hybrid=False, conf=None, iou=0.7, max_det=300, half=False, dnn=False, plots=True, source=None, vid_stride=1, stream_buffer=False, visualize=False, augment=False, agnostic_nms=False, classes=None, retina_masks=False, embed=None, show=False, save_frames=False, save_txt=False, save_conf=False, save_crop=False, show_labels=True, show_conf=True, show_boxes=True, line_width=None, format=torchscript, keras=False, optimize=False, int8=False, dynamic=False, simplify=True, opset=None, workspace=None, nms=False, lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, dfl=1.5, pose=12.0, kobj=1.0, nbs=64, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, bgr=0.0, mosaic=1.0, mixup=0.0, copy_paste=0.0, copy_paste_mode=flip, auto_augment=randaugment, erasing=0.4, crop_fraction=1.0, cfg=None, tracker=botsort.yaml, save_dir=training_logs/train

Downloading https://ultralytics.com/assets/Arial.ttf to '/root/.config/Ultralytics/Arial.ttf'...

100%|██████████| 755k/755k [00:00<00:00, 121MB/s]

Overriding model.yaml nc=80 with nc=1

from n params module arguments

0 -1 1 464 ultralytics.nn.modules.conv.Conv [3, 16, 3, 2]

1 -1 1 4672 ultralytics.nn.modules.conv.Conv [16, 32, 3, 2]

2 -1 1 6640 ultralytics.nn.modules.block.C3k2 [32, 64, 1, False, 0.25]

3 -1 1 36992 ultralytics.nn.modules.conv.Conv [64, 64, 3, 2]

4 -1 1 26080 ultralytics.nn.modules.block.C3k2 [64, 128, 1, False, 0.25]

5 -1 1 147712 ultralytics.nn.modules.conv.Conv [128, 128, 3, 2]

6 -1 1 87040 ultralytics.nn.modules.block.C3k2 [128, 128, 1, True]

7 -1 1 295424 ultralytics.nn.modules.conv.Conv [128, 256, 3, 2]

8 -1 1 346112 ultralytics.nn.modules.block.C3k2 [256, 256, 1, True]

9 -1 1 164608 ultralytics.nn.modules.block.SPPF [256, 256, 5]

10 -1 1 249728 ultralytics.nn.modules.block.C2PSA [256, 256, 1]

11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

12 [-1, 6] 1 0 ultralytics.nn.modules.conv.Concat [1]

13 -1 1 111296 ultralytics.nn.modules.block.C3k2 [384, 128, 1, False]

14 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

15 [-1, 4] 1 0 ultralytics.nn.modules.conv.Concat [1]

16 -1 1 32096 ultralytics.nn.modules.block.C3k2 [256, 64, 1, False]

17 -1 1 36992 ultralytics.nn.modules.conv.Conv [64, 64, 3, 2]

18 [-1, 13] 1 0 ultralytics.nn.modules.conv.Concat [1]

19 -1 1 86720 ultralytics.nn.modules.block.C3k2 [192, 128, 1, False]

20 -1 1 147712 ultralytics.nn.modules.conv.Conv [128, 128, 3, 2]

21 [-1, 10] 1 0 ultralytics.nn.modules.conv.Concat [1]

22 -1 1 378880 ultralytics.nn.modules.block.C3k2 [384, 256, 1, True]

23 [16, 19, 22] 1 430867 ultralytics.nn.modules.head.Detect [1, [64, 128, 256]]

YOLO11n summary: 181 layers, 2,590,035 parameters, 2,590,019 gradients, 6.4 GFLOPs

Transferred 448/499 items from pretrained weights

TensorBoard: Start with 'tensorboard --logdir training_logs/train', view at http://localhost:6006/

Freezing layer 'model.23.dfl.conv.weight'

AMP: running Automatic Mixed Precision (AMP) checks...

AMP: checks passed ✅

train: Scanning /content/fish_dataset/labels/train... 786 images, 77 backgrounds, 1 corrupt: 100%|██████████| 786/786 [00:00<00:00, 2446.68it/s]

train: WARNING ⚠️ /content/fish_dataset/images/train/20161014.193730.503.011459.jpg: ignoring corrupt image/label: non-normalized or out of bounds coordinates [ 1.1097]

train: New cache created: /content/fish_dataset/labels/train.cache

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01, num_output_channels=3, method='weighted_average'), CLAHE(p=0.01, clip_limit=(1.0, 4.0), tile_grid_size=(8, 8))

/usr/local/lib/python3.11/dist-packages/albumentations/__init__.py:28: UserWarning: A new version of Albumentations is available: '2.0.5' (you have '2.0.4'). Upgrade using: pip install -U albumentations. To disable automatic update checks, set the environment variable NO_ALBUMENTATIONS_UPDATE to 1.

check_for_updates()

val: Scanning /content/fish_dataset/labels/val... 196 images, 19 backgrounds, 0 corrupt: 100%|██████████| 196/196 [00:00<00:00, 1378.59it/s]

val: New cache created: /content/fish_dataset/labels/val.cache

Plotting labels to training_logs/train/labels.jpg...

optimizer: 'optimizer=auto' found, ignoring 'lr0=0.01' and 'momentum=0.937' and determining best 'optimizer', 'lr0' and 'momentum' automatically...

optimizer: AdamW(lr=0.002, momentum=0.9) with parameter groups 81 weight(decay=0.0), 88 weight(decay=0.0005), 87 bias(decay=0.0)

TensorBoard: model graph visualization added ✅

Image sizes 640 train, 640 val

Using 2 dataloader workers

Logging results to training_logs/train

Starting training for 5 epochs...

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

1/5 2.34G 1.409 2.513 1.331 0 640: 100%|██████████| 50/50 [00:17<00:00, 2.87it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:02<00:00, 2.42it/s]

all 196 457 0.953 0.267 0.649 0.331

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

2/5 2.24G 1.399 1.561 1.33 3 640: 100%|██████████| 50/50 [00:17<00:00, 2.88it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 4.33it/s]

all 196 457 0.72 0.468 0.556 0.288

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

3/5 2.25G 1.408 1.392 1.319 9 640: 100%|██████████| 50/50 [00:15<00:00, 3.32it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 4.04it/s]

all 196 457 0.821 0.681 0.758 0.44

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

4/5 2.24G 1.352 1.289 1.294 1 640: 100%|██████████| 50/50 [00:15<00:00, 3.19it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:02<00:00, 2.64it/s]

all 196 457 0.854 0.796 0.877 0.536

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

5/5 2.23G 1.236 1.132 1.23 10 640: 100%|██████████| 50/50 [00:14<00:00, 3.37it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:01<00:00, 3.97it/s]

all 196 457 0.891 0.844 0.927 0.6

5 epochs completed in 0.028 hours.

Optimizer stripped from training_logs/train/weights/last.pt, 5.5MB

Optimizer stripped from training_logs/train/weights/best.pt, 5.5MB

Validating training_logs/train/weights/best.pt...

Ultralytics 8.3.83 🚀 Python-3.11.11 torch-2.5.1+cu124 CUDA:0 (Tesla T4, 15095MiB)

YOLO11n summary (fused): 100 layers, 2,582,347 parameters, 0 gradients, 6.3 GFLOPs

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 7/7 [00:03<00:00, 1.86it/s]

all 196 457 0.891 0.844 0.927 0.601

Speed: 0.4ms preprocess, 2.8ms inference, 0.0ms loss, 4.3ms postprocess per image

Results saved to training_logs/train

Training complete!

Trained model saved to /content/fish_dataset/yolo11n_fish_trained_v1.pt

Weights saved to /content/fish_dataset/yolo11n_fish_weights_v1.pth

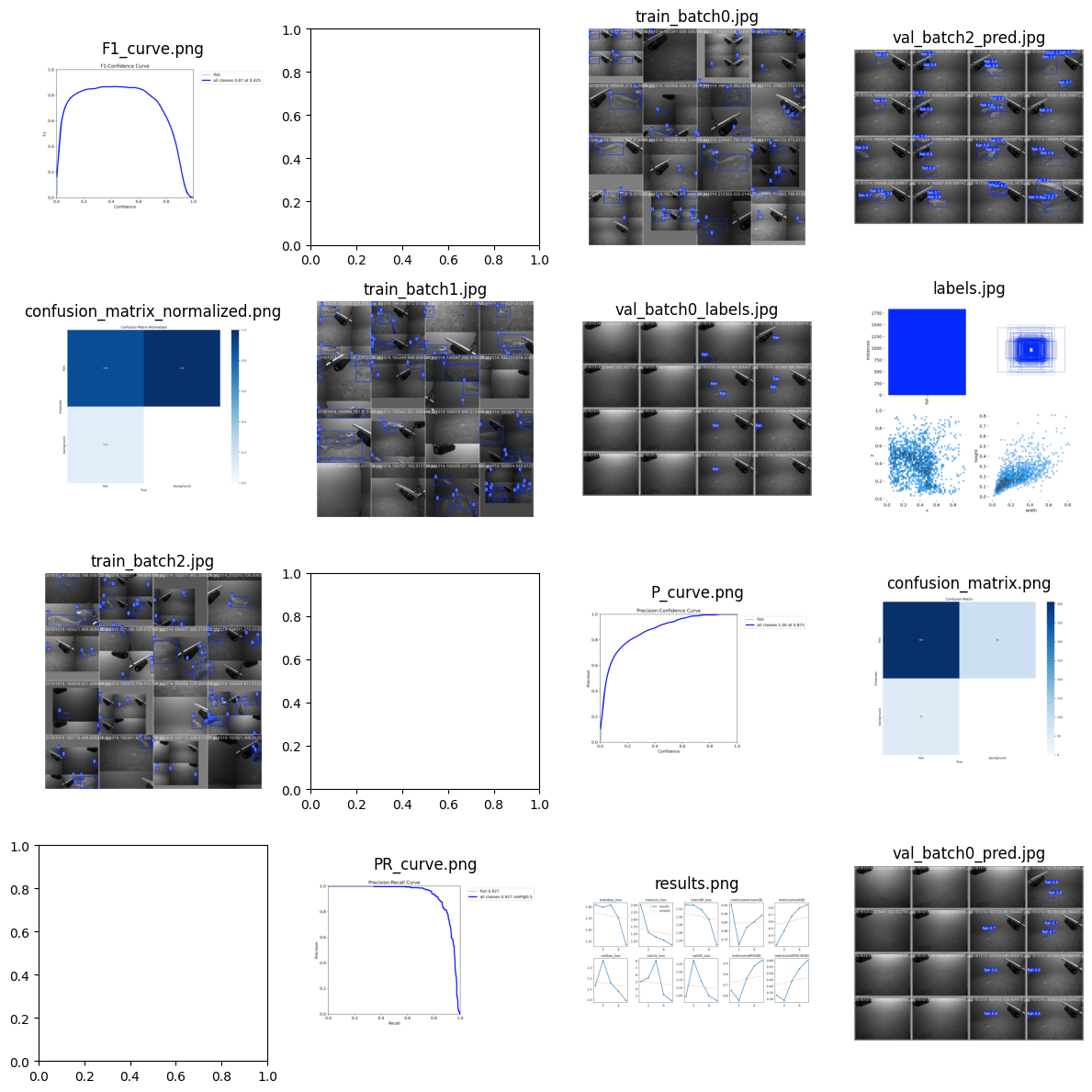

21. View Training Logs#

import os

import matplotlib.pyplot as plt

from glob import glob

from PIL import Image # Use PIL for better handling of images

# Path to training images

image_folder = "/content/training_logs/train"

# Get all image file paths (adjust extensions if needed)

image_paths = glob(os.path.join(image_folder, "*.*")) # Matches all image types

# Check if images exist

if not image_paths:

print("❌ No images found! Check the folder path and try again.")

else:

print(f"✅ Found {len(image_paths)} images.")

# Limit number of images displayed (max 16 for readability)

num_images = min(16, len(image_paths))

cols = 4 # Number of columns in the grid

rows = (num_images // cols) + (num_images % cols > 0)

# Create a plot grid

fig, axes = plt.subplots(rows, cols, figsize=(12, 12))

# Display images

for ax, img_path in zip(axes.ravel(), image_paths[:num_images]):

try:

img = Image.open(img_path) # Open image with PIL

ax.imshow(img)

ax.set_title(os.path.basename(img_path))

ax.axis("off")

except Exception as e:

print(f"⚠️ Skipping: {img_path} ({e})")

plt.tight_layout()

plt.show()

✅ Found 21 images.

⚠️ Skipping: /content/training_logs/train/results.csv (cannot identify image file '/content/training_logs/train/results.csv')

⚠️ Skipping: /content/training_logs/train/events.out.tfevents.1741117959.cc3d29d77482.1852.0 (cannot identify image file '/content/training_logs/train/events.out.tfevents.1741117959.cc3d29d77482.1852.0')

⚠️ Skipping: /content/training_logs/train/args.yaml (cannot identify image file '/content/training_logs/train/args.yaml')

22. Explanation of Training Results#

22.1. 1. Training Loss Metrics#

Each epoch reports three loss values:

Box Loss: Measures the error in bounding box predictions.

Cls Loss: Classification loss, measuring the accuracy of class assignments.

DFL Loss: Distribution Focal Loss, related to precise bounding box localization.

22.1.1. What to look for?#

Loss values should generally decrease over epochs, indicating improved learning.

Your results show a steady decrease in all three losses, which suggests successful model training.

22.2. 2. Validation Metrics#

These metrics evaluate model performance on a validation set after each epoch:

Precision (P): How many predicted objects are correct? (Higher is better)

Recall (R): How many actual objects were detected? (Higher is better)

mAP50: Mean Average Precision at IoU 0.5 (a key detection accuracy metric).

mAP50-95: Mean Average Precision across IoU thresholds (more strict than mAP50).

22.2.1. What to look for?#

Your mAP50 increased from 0.649 (Epoch 1) to 0.927 (Epoch 5), showing strong improvement.

Precision and Recall improved, meaning the model is both detecting more objects and making fewer false positives.

mAP50-95 also increased (0.331 → 0.6), showing improved performance under stricter evaluation.

22.3. 3. Observations from Epoch Progress#

Epoch 1: Low recall (0.267), meaning many objects were missed.

Epoch 2: Precision dropped, recall improved; suggests the model is learning but still misclassifying.

Epoch 3-4: Significant improvement in recall and mAP, meaning the model is becoming more accurate.

Epoch 5: Best performance with high precision (0.891), recall (0.844), and mAP50 (0.927).

23. Final Takeaways#

The model is learning well: Losses decrease, and detection accuracy improves significantly.

Epoch 5 is the best-performing epoch: High precision, recall, and mAP.

Potential next steps: If training longer, monitor overfitting (gap between train & val metrics). Consider fine-tuning if the performance levels off.

24. Download Model and Logs#

import shutil

# Zip the training logs directory

shutil.make_archive("/content/training_logs", 'zip', "/content/training_logs")

# Zip the trained model

shutil.make_archive("/content/trained_model", 'zip', "/content/fish_dataset/")

from google.colab import files

# Download the zipped logs

files.download("/content/training_logs.zip")

# Download the zipped model

files.download("/content/trained_model.zip")

25. Print Metrics#

# Evaluate model performance

metrics = small_model.val(data=yaml_file_path, device=device)

print(metrics)

Ultralytics 8.3.83 🚀 Python-3.11.11 torch-2.5.1+cu124 CUDA:0 (Tesla T4, 15095MiB)

YOLO11n summary (fused): 100 layers, 2,582,347 parameters, 0 gradients, 6.3 GFLOPs

val: Scanning /content/fish_dataset/labels/val.cache... 196 images, 19 backgrounds, 0 corrupt: 100%|██████████| 196/196 [00:00<?, ?it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 13/13 [00:03<00:00, 3.85it/s]

all 196 457 0.891 0.844 0.927 0.601

Speed: 2.3ms preprocess, 5.3ms inference, 0.0ms loss, 1.9ms postprocess per image

Results saved to training_logs/train2

ultralytics.utils.metrics.DetMetrics object with attributes:

ap_class_index: array([0])

box: ultralytics.utils.metrics.Metric object

confusion_matrix: <ultralytics.utils.metrics.ConfusionMatrix object at 0x7dd6474960d0>

curves: ['Precision-Recall(B)', 'F1-Confidence(B)', 'Precision-Confidence(B)', 'Recall-Confidence(B)']

curves_results: [[array([ 0, 0.001001, 0.002002, 0.003003, 0.004004, 0.005005, 0.006006, 0.007007, 0.008008, 0.009009, 0.01001, 0.011011, 0.012012, 0.013013, 0.014014, 0.015015, 0.016016, 0.017017, 0.018018, 0.019019, 0.02002, 0.021021, 0.022022, 0.023023,

0.024024, 0.025025, 0.026026, 0.027027, 0.028028, 0.029029, 0.03003, 0.031031, 0.032032, 0.033033, 0.034034, 0.035035, 0.036036, 0.037037, 0.038038, 0.039039, 0.04004, 0.041041, 0.042042, 0.043043, 0.044044, 0.045045, 0.046046, 0.047047,

0.048048, 0.049049, 0.05005, 0.051051, 0.052052, 0.053053, 0.054054, 0.055055, 0.056056, 0.057057, 0.058058, 0.059059, 0.06006, 0.061061, 0.062062, 0.063063, 0.064064, 0.065065, 0.066066, 0.067067, 0.068068, 0.069069, 0.07007, 0.071071,

0.072072, 0.073073, 0.074074, 0.075075, 0.076076, 0.077077, 0.078078, 0.079079, 0.08008, 0.081081, 0.082082, 0.083083, 0.084084, 0.085085, 0.086086, 0.087087, 0.088088, 0.089089, 0.09009, 0.091091, 0.092092, 0.093093, 0.094094, 0.095095,

0.096096, 0.097097, 0.098098, 0.099099, 0.1001, 0.1011, 0.1021, 0.1031, 0.1041, 0.10511, 0.10611, 0.10711, 0.10811, 0.10911, 0.11011, 0.11111, 0.11211, 0.11311, 0.11411, 0.11512, 0.11612, 0.11712, 0.11812, 0.11912,

0.12012, 0.12112, 0.12212, 0.12312, 0.12412, 0.12513, 0.12613, 0.12713, 0.12813, 0.12913, 0.13013, 0.13113, 0.13213, 0.13313, 0.13413, 0.13514, 0.13614, 0.13714, 0.13814, 0.13914, 0.14014, 0.14114, 0.14214, 0.14314,

0.14414, 0.14515, 0.14615, 0.14715, 0.14815, 0.14915, 0.15015, 0.15115, 0.15215, 0.15315, 0.15415, 0.15516, 0.15616, 0.15716, 0.15816, 0.15916, 0.16016, 0.16116, 0.16216, 0.16316, 0.16416, 0.16517, 0.16617, 0.16717,

0.16817, 0.16917, 0.17017, 0.17117, 0.17217, 0.17317, 0.17417, 0.17518, 0.17618, 0.17718, 0.17818, 0.17918, 0.18018, 0.18118, 0.18218, 0.18318, 0.18418, 0.18519, 0.18619, 0.18719, 0.18819, 0.18919, 0.19019, 0.19119,

0.19219, 0.19319, 0.19419, 0.1952, 0.1962, 0.1972, 0.1982, 0.1992, 0.2002, 0.2012, 0.2022, 0.2032, 0.2042, 0.20521, 0.20621, 0.20721, 0.20821, 0.20921, 0.21021, 0.21121, 0.21221, 0.21321, 0.21421, 0.21522,

0.21622, 0.21722, 0.21822, 0.21922, 0.22022, 0.22122, 0.22222, 0.22322, 0.22422, 0.22523, 0.22623, 0.22723, 0.22823, 0.22923, 0.23023, 0.23123, 0.23223, 0.23323, 0.23423, 0.23524, 0.23624, 0.23724, 0.23824, 0.23924,

0.24024, 0.24124, 0.24224, 0.24324, 0.24424, 0.24525, 0.24625, 0.24725, 0.24825, 0.24925, 0.25025, 0.25125, 0.25225, 0.25325, 0.25425, 0.25526, 0.25626, 0.25726, 0.25826, 0.25926, 0.26026, 0.26126, 0.26226, 0.26326,

0.26426, 0.26527, 0.26627, 0.26727, 0.26827, 0.26927, 0.27027, 0.27127, 0.27227, 0.27327, 0.27427, 0.27528, 0.27628, 0.27728, 0.27828, 0.27928, 0.28028, 0.28128, 0.28228, 0.28328, 0.28428, 0.28529, 0.28629, 0.28729,

0.28829, 0.28929, 0.29029, 0.29129, 0.29229, 0.29329, 0.29429, 0.2953, 0.2963, 0.2973, 0.2983, 0.2993, 0.3003, 0.3013, 0.3023, 0.3033, 0.3043, 0.30531, 0.30631, 0.30731, 0.30831, 0.30931, 0.31031, 0.31131,

0.31231, 0.31331, 0.31431, 0.31532, 0.31632, 0.31732, 0.31832, 0.31932, 0.32032, 0.32132, 0.32232, 0.32332, 0.32432, 0.32533, 0.32633, 0.32733, 0.32833, 0.32933, 0.33033, 0.33133, 0.33233, 0.33333, 0.33433, 0.33534,

0.33634, 0.33734, 0.33834, 0.33934, 0.34034, 0.34134, 0.34234, 0.34334, 0.34434, 0.34535, 0.34635, 0.34735, 0.34835, 0.34935, 0.35035, 0.35135, 0.35235, 0.35335, 0.35435, 0.35536, 0.35636, 0.35736, 0.35836, 0.35936,

0.36036, 0.36136, 0.36236, 0.36336, 0.36436, 0.36537, 0.36637, 0.36737, 0.36837, 0.36937, 0.37037, 0.37137, 0.37237, 0.37337, 0.37437, 0.37538, 0.37638, 0.37738, 0.37838, 0.37938, 0.38038, 0.38138, 0.38238, 0.38338,

0.38438, 0.38539, 0.38639, 0.38739, 0.38839, 0.38939, 0.39039, 0.39139, 0.39239, 0.39339, 0.39439, 0.3954, 0.3964, 0.3974, 0.3984, 0.3994, 0.4004, 0.4014, 0.4024, 0.4034, 0.4044, 0.40541, 0.40641, 0.40741,

0.40841, 0.40941, 0.41041, 0.41141, 0.41241, 0.41341, 0.41441, 0.41542, 0.41642, 0.41742, 0.41842, 0.41942, 0.42042, 0.42142, 0.42242, 0.42342, 0.42442, 0.42543, 0.42643, 0.42743, 0.42843, 0.42943, 0.43043, 0.43143,

0.43243, 0.43343, 0.43443, 0.43544, 0.43644, 0.43744, 0.43844, 0.43944, 0.44044, 0.44144, 0.44244, 0.44344, 0.44444, 0.44545, 0.44645, 0.44745, 0.44845, 0.44945, 0.45045, 0.45145, 0.45245, 0.45345, 0.45445, 0.45546,

0.45646, 0.45746, 0.45846, 0.45946, 0.46046, 0.46146, 0.46246, 0.46346, 0.46446, 0.46547, 0.46647, 0.46747, 0.46847, 0.46947, 0.47047, 0.47147, 0.47247, 0.47347, 0.47447, 0.47548, 0.47648, 0.47748, 0.47848, 0.47948,

0.48048, 0.48148, 0.48248, 0.48348, 0.48448, 0.48549, 0.48649, 0.48749, 0.48849, 0.48949, 0.49049, 0.49149, 0.49249, 0.49349, 0.49449, 0.4955, 0.4965, 0.4975, 0.4985, 0.4995, 0.5005, 0.5015, 0.5025, 0.5035,

0.5045, 0.50551, 0.50651, 0.50751, 0.50851, 0.50951, 0.51051, 0.51151, 0.51251, 0.51351, 0.51451, 0.51552, 0.51652, 0.51752, 0.51852, 0.51952, 0.52052, 0.52152, 0.52252, 0.52352, 0.52452, 0.52553, 0.52653, 0.52753,

0.52853, 0.52953, 0.53053, 0.53153, 0.53253, 0.53353, 0.53453, 0.53554, 0.53654, 0.53754, 0.53854, 0.53954, 0.54054, 0.54154, 0.54254, 0.54354, 0.54454, 0.54555, 0.54655, 0.54755, 0.54855, 0.54955, 0.55055, 0.55155,

0.55255, 0.55355, 0.55455, 0.55556, 0.55656, 0.55756, 0.55856, 0.55956, 0.56056, 0.56156, 0.56256, 0.56356, 0.56456, 0.56557, 0.56657, 0.56757, 0.56857, 0.56957, 0.57057, 0.57157, 0.57257, 0.57357, 0.57457, 0.57558,

0.57658, 0.57758, 0.57858, 0.57958, 0.58058, 0.58158, 0.58258, 0.58358, 0.58458, 0.58559, 0.58659, 0.58759, 0.58859, 0.58959, 0.59059, 0.59159, 0.59259, 0.59359, 0.59459, 0.5956, 0.5966, 0.5976, 0.5986, 0.5996,

0.6006, 0.6016, 0.6026, 0.6036, 0.6046, 0.60561, 0.60661, 0.60761, 0.60861, 0.60961, 0.61061, 0.61161, 0.61261, 0.61361, 0.61461, 0.61562, 0.61662, 0.61762, 0.61862, 0.61962, 0.62062, 0.62162, 0.62262, 0.62362,

0.62462, 0.62563, 0.62663, 0.62763, 0.62863, 0.62963, 0.63063, 0.63163, 0.63263, 0.63363, 0.63463, 0.63564, 0.63664, 0.63764, 0.63864, 0.63964, 0.64064, 0.64164, 0.64264, 0.64364, 0.64464, 0.64565, 0.64665, 0.64765,

0.64865, 0.64965, 0.65065, 0.65165, 0.65265, 0.65365, 0.65465, 0.65566, 0.65666, 0.65766, 0.65866, 0.65966, 0.66066, 0.66166, 0.66266, 0.66366, 0.66466, 0.66567, 0.66667, 0.66767, 0.66867, 0.66967, 0.67067, 0.67167,

0.67267, 0.67367, 0.67467, 0.67568, 0.67668, 0.67768, 0.67868, 0.67968, 0.68068, 0.68168, 0.68268, 0.68368, 0.68468, 0.68569, 0.68669, 0.68769, 0.68869, 0.68969, 0.69069, 0.69169, 0.69269, 0.69369, 0.69469, 0.6957,

0.6967, 0.6977, 0.6987, 0.6997, 0.7007, 0.7017, 0.7027, 0.7037, 0.7047, 0.70571, 0.70671, 0.70771, 0.70871, 0.70971, 0.71071, 0.71171, 0.71271, 0.71371, 0.71471, 0.71572, 0.71672, 0.71772, 0.71872, 0.71972,

0.72072, 0.72172, 0.72272, 0.72372, 0.72472, 0.72573, 0.72673, 0.72773, 0.72873, 0.72973, 0.73073, 0.73173, 0.73273, 0.73373, 0.73473, 0.73574, 0.73674, 0.73774, 0.73874, 0.73974, 0.74074, 0.74174, 0.74274, 0.74374,

0.74474, 0.74575, 0.74675, 0.74775, 0.74875, 0.74975, 0.75075, 0.75175, 0.75275, 0.75375, 0.75475, 0.75576, 0.75676, 0.75776, 0.75876, 0.75976, 0.76076, 0.76176, 0.76276, 0.76376, 0.76476, 0.76577, 0.76677, 0.76777,

0.76877, 0.76977, 0.77077, 0.77177, 0.77277, 0.77377, 0.77477, 0.77578, 0.77678, 0.77778, 0.77878, 0.77978, 0.78078, 0.78178, 0.78278, 0.78378, 0.78478, 0.78579, 0.78679, 0.78779, 0.78879, 0.78979, 0.79079, 0.79179,

0.79279, 0.79379, 0.79479, 0.7958, 0.7968, 0.7978, 0.7988, 0.7998, 0.8008, 0.8018, 0.8028, 0.8038, 0.8048, 0.80581, 0.80681, 0.80781, 0.80881, 0.80981, 0.81081, 0.81181, 0.81281, 0.81381, 0.81481, 0.81582,

0.81682, 0.81782, 0.81882, 0.81982, 0.82082, 0.82182, 0.82282, 0.82382, 0.82482, 0.82583, 0.82683, 0.82783, 0.82883, 0.82983, 0.83083, 0.83183, 0.83283, 0.83383, 0.83483, 0.83584, 0.83684, 0.83784, 0.83884, 0.83984,

0.84084, 0.84184, 0.84284, 0.84384, 0.84484, 0.84585, 0.84685, 0.84785, 0.84885, 0.84985, 0.85085, 0.85185, 0.85285, 0.85385, 0.85485, 0.85586, 0.85686, 0.85786, 0.85886, 0.85986, 0.86086, 0.86186, 0.86286, 0.86386,

0.86486, 0.86587, 0.86687, 0.86787, 0.86887, 0.86987, 0.87087, 0.87187, 0.87287, 0.87387, 0.87487, 0.87588, 0.87688, 0.87788, 0.87888, 0.87988, 0.88088, 0.88188, 0.88288, 0.88388, 0.88488, 0.88589, 0.88689, 0.88789,

0.88889, 0.88989, 0.89089, 0.89189, 0.89289, 0.89389, 0.89489, 0.8959, 0.8969, 0.8979, 0.8989, 0.8999, 0.9009, 0.9019, 0.9029, 0.9039, 0.9049, 0.90591, 0.90691, 0.90791, 0.90891, 0.90991, 0.91091, 0.91191,

0.91291, 0.91391, 0.91491, 0.91592, 0.91692, 0.91792, 0.91892, 0.91992, 0.92092, 0.92192, 0.92292, 0.92392, 0.92492, 0.92593, 0.92693, 0.92793, 0.92893, 0.92993, 0.93093, 0.93193, 0.93293, 0.93393, 0.93493, 0.93594,

0.93694, 0.93794, 0.93894, 0.93994, 0.94094, 0.94194, 0.94294, 0.94394, 0.94494, 0.94595, 0.94695, 0.94795, 0.94895, 0.94995, 0.95095, 0.95195, 0.95295, 0.95395, 0.95495, 0.95596, 0.95696, 0.95796, 0.95896, 0.95996,

0.96096, 0.96196, 0.96296, 0.96396, 0.96496, 0.96597, 0.96697, 0.96797, 0.96897, 0.96997, 0.97097, 0.97197, 0.97297, 0.97397, 0.97497, 0.97598, 0.97698, 0.97798, 0.97898, 0.97998, 0.98098, 0.98198, 0.98298, 0.98398,

0.98498, 0.98599, 0.98699, 0.98799, 0.98899, 0.98999, 0.99099, 0.99199, 0.99299, 0.99399, 0.99499, 0.996, 0.997, 0.998, 0.999, 1]), array([[ 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628,

0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99628, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303,

0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303, 0.99303,

0.99303, 0.99303, 0.99303, 0.98962, 0.98962, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742,

0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742,

0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98742, 0.98498, 0.98498, 0.98498,

0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98498,

0.98498, 0.98498, 0.98498, 0.98498, 0.98498, 0.98214, 0.98214, 0.98214, 0.98214, 0.97935, 0.97935, 0.97935, 0.97935, 0.97681, 0.97681, 0.97681, 0.97681, 0.97681, 0.97681, 0.97681, 0.97681, 0.97681, 0.97681,

0.97681, 0.97414, 0.97414, 0.97414, 0.97414, 0.97414, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.97191, 0.96685, 0.96685,

0.96685, 0.96685, 0.96685, 0.96685, 0.96685, 0.96685, 0.96685, 0.96448, 0.96448, 0.96448, 0.96448, 0.96448, 0.96448, 0.96226, 0.96226, 0.96226, 0.96226, 0.96226, 0.96226, 0.96226, 0.96226, 0.96226, 0.95979,

0.95979, 0.94723, 0.94723, 0.94241, 0.94241, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93862, 0.93199, 0.93199,

0.93199, 0.93199, 0.93199, 0.93199, 0.92556, 0.92556, 0.92556, 0.92556, 0.92556, 0.92556, 0.92556, 0.91912, 0.91912, 0.91912, 0.91912, 0.91707, 0.91707, 0.91566, 0.91566, 0.91566, 0.91566, 0.91566, 0.91566,

0.91566, 0.91566, 0.91566, 0.91148, 0.91148, 0.89696, 0.89696, 0.89696, 0.89696, 0.89696, 0.89302, 0.89302, 0.89145, 0.89145, 0.89145, 0.89145, 0.8861, 0.8861, 0.8861, 0.8861, 0.8861, 0.8861, 0.8861,

0.88063, 0.88063, 0.88063, 0.88063, 0.87892, 0.87892, 0.87723, 0.87723, 0.87723, 0.87556, 0.87556, 0.86813, 0.86813, 0.859, 0.859, 0.83613, 0.83613, 0.83613, 0.83613, 0.83613, 0.82377, 0.82377, 0.82377,

0.82377, 0.82377, 0.82377, 0.82377, 0.82377, 0.82245, 0.82245, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.81151, 0.8055, 0.8055,

0.79383, 0.79383, 0.79383, 0.79383, 0.77925, 0.77925, 0.77861, 0.77861, 0.77861, 0.77861, 0.77861, 0.7619, 0.7619, 0.73982, 0.73982, 0.73982, 0.73982, 0.73509, 0.73509, 0.71795, 0.71795, 0.71795, 0.70167,

0.70167, 0.69752, 0.69752, 0.68558, 0.68558, 0.68277, 0.68277, 0.66614, 0.66614, 0.66614, 0.63111, 0.63111, 0.59888, 0.59888, 0.59444, 0.59444, 0.59336, 0.59336, 0.58824, 0.58824, 0.58165, 0.58165, 0.58165,

0.57371, 0.57371, 0.55727, 0.55727, 0.55146, 0.55146, 0.5344, 0.5344, 0.52977, 0.52977, 0.52977, 0.48991, 0.48991, 0.45816, 0.45816, 0.42997, 0.42997, 0.37801, 0.37801, 0.36873, 0.36873, 0.36873, 0.33844,

0.33844, 0.3153, 0.3153, 0.28831, 0.28831, 0.24902, 0.24902, 0.14163, 0.14163, 0.14123, 0.14123, 0.14123, 0.11633, 0.11633, 0.10528, 0.10528, 0.067084, 0.067084, 0.041138, 0.041138, 0.02542, 0.02542, 0.02542,

0.024649, 0.024649, 0.01698, 0.01698, 0.0092133, 0.0092133, 0.0070797, 0.0053098, 0.0035398, 0.0017699, 0]]), 'Recall', 'Precision'], [array([ 0, 0.001001, 0.002002, 0.003003, 0.004004, 0.005005, 0.006006, 0.007007, 0.008008, 0.009009, 0.01001, 0.011011, 0.012012, 0.013013, 0.014014, 0.015015, 0.016016, 0.017017, 0.018018, 0.019019, 0.02002, 0.021021, 0.022022, 0.023023,

0.024024, 0.025025, 0.026026, 0.027027, 0.028028, 0.029029, 0.03003, 0.031031, 0.032032, 0.033033, 0.034034, 0.035035, 0.036036, 0.037037, 0.038038, 0.039039, 0.04004, 0.041041, 0.042042, 0.043043, 0.044044, 0.045045, 0.046046, 0.047047,

0.048048, 0.049049, 0.05005, 0.051051, 0.052052, 0.053053, 0.054054, 0.055055, 0.056056, 0.057057, 0.058058, 0.059059, 0.06006, 0.061061, 0.062062, 0.063063, 0.064064, 0.065065, 0.066066, 0.067067, 0.068068, 0.069069, 0.07007, 0.071071,

0.072072, 0.073073, 0.074074, 0.075075, 0.076076, 0.077077, 0.078078, 0.079079, 0.08008, 0.081081, 0.082082, 0.083083, 0.084084, 0.085085, 0.086086, 0.087087, 0.088088, 0.089089, 0.09009, 0.091091, 0.092092, 0.093093, 0.094094, 0.095095,

0.096096, 0.097097, 0.098098, 0.099099, 0.1001, 0.1011, 0.1021, 0.1031, 0.1041, 0.10511, 0.10611, 0.10711, 0.10811, 0.10911, 0.11011, 0.11111, 0.11211, 0.11311, 0.11411, 0.11512, 0.11612, 0.11712, 0.11812, 0.11912,

0.12012, 0.12112, 0.12212, 0.12312, 0.12412, 0.12513, 0.12613, 0.12713, 0.12813, 0.12913, 0.13013, 0.13113, 0.13213, 0.13313, 0.13413, 0.13514, 0.13614, 0.13714, 0.13814, 0.13914, 0.14014, 0.14114, 0.14214, 0.14314,

0.14414, 0.14515, 0.14615, 0.14715, 0.14815, 0.14915, 0.15015, 0.15115, 0.15215, 0.15315, 0.15415, 0.15516, 0.15616, 0.15716, 0.15816, 0.15916, 0.16016, 0.16116, 0.16216, 0.16316, 0.16416, 0.16517, 0.16617, 0.16717,

0.16817, 0.16917, 0.17017, 0.17117, 0.17217, 0.17317, 0.17417, 0.17518, 0.17618, 0.17718, 0.17818, 0.17918, 0.18018, 0.18118, 0.18218, 0.18318, 0.18418, 0.18519, 0.18619, 0.18719, 0.18819, 0.18919, 0.19019, 0.19119,

0.19219, 0.19319, 0.19419, 0.1952, 0.1962, 0.1972, 0.1982, 0.1992, 0.2002, 0.2012, 0.2022, 0.2032, 0.2042, 0.20521, 0.20621, 0.20721, 0.20821, 0.20921, 0.21021, 0.21121, 0.21221, 0.21321, 0.21421, 0.21522,

0.21622, 0.21722, 0.21822, 0.21922, 0.22022, 0.22122, 0.22222, 0.22322, 0.22422, 0.22523, 0.22623, 0.22723, 0.22823, 0.22923, 0.23023, 0.23123, 0.23223, 0.23323, 0.23423, 0.23524, 0.23624, 0.23724, 0.23824, 0.23924,

0.24024, 0.24124, 0.24224, 0.24324, 0.24424, 0.24525, 0.24625, 0.24725, 0.24825, 0.24925, 0.25025, 0.25125, 0.25225, 0.25325, 0.25425, 0.25526, 0.25626, 0.25726, 0.25826, 0.25926, 0.26026, 0.26126, 0.26226, 0.26326,

0.26426, 0.26527, 0.26627, 0.26727, 0.26827, 0.26927, 0.27027, 0.27127, 0.27227, 0.27327, 0.27427, 0.27528, 0.27628, 0.27728, 0.27828, 0.27928, 0.28028, 0.28128, 0.28228, 0.28328, 0.28428, 0.28529, 0.28629, 0.28729,

0.28829, 0.28929, 0.29029, 0.29129, 0.29229, 0.29329, 0.29429, 0.2953, 0.2963, 0.2973, 0.2983, 0.2993, 0.3003, 0.3013, 0.3023, 0.3033, 0.3043, 0.30531, 0.30631, 0.30731, 0.30831, 0.30931, 0.31031, 0.31131,

0.31231, 0.31331, 0.31431, 0.31532, 0.31632, 0.31732, 0.31832, 0.31932, 0.32032, 0.32132, 0.32232, 0.32332, 0.32432, 0.32533, 0.32633, 0.32733, 0.32833, 0.32933, 0.33033, 0.33133, 0.33233, 0.33333, 0.33433, 0.33534,

0.33634, 0.33734, 0.33834, 0.33934, 0.34034, 0.34134, 0.34234, 0.34334, 0.34434, 0.34535, 0.34635, 0.34735, 0.34835, 0.34935, 0.35035, 0.35135, 0.35235, 0.35335, 0.35435, 0.35536, 0.35636, 0.35736, 0.35836, 0.35936,

0.36036, 0.36136, 0.36236, 0.36336, 0.36436, 0.36537, 0.36637, 0.36737, 0.36837, 0.36937, 0.37037, 0.37137, 0.37237, 0.37337, 0.37437, 0.37538, 0.37638, 0.37738, 0.37838, 0.37938, 0.38038, 0.38138, 0.38238, 0.38338,

0.38438, 0.38539, 0.38639, 0.38739, 0.38839, 0.38939, 0.39039, 0.39139, 0.39239, 0.39339, 0.39439, 0.3954, 0.3964, 0.3974, 0.3984, 0.3994, 0.4004, 0.4014, 0.4024, 0.4034, 0.4044, 0.40541, 0.40641, 0.40741,

0.40841, 0.40941, 0.41041, 0.41141, 0.41241, 0.41341, 0.41441, 0.41542, 0.41642, 0.41742, 0.41842, 0.41942, 0.42042, 0.42142, 0.42242, 0.42342, 0.42442, 0.42543, 0.42643, 0.42743, 0.42843, 0.42943, 0.43043, 0.43143,

0.43243, 0.43343, 0.43443, 0.43544, 0.43644, 0.43744, 0.43844, 0.43944, 0.44044, 0.44144, 0.44244, 0.44344, 0.44444, 0.44545, 0.44645, 0.44745, 0.44845, 0.44945, 0.45045, 0.45145, 0.45245, 0.45345, 0.45445, 0.45546,

0.45646, 0.45746, 0.45846, 0.45946, 0.46046, 0.46146, 0.46246, 0.46346, 0.46446, 0.46547, 0.46647, 0.46747, 0.46847, 0.46947, 0.47047, 0.47147, 0.47247, 0.47347, 0.47447, 0.47548, 0.47648, 0.47748, 0.47848, 0.47948,

0.48048, 0.48148, 0.48248, 0.48348, 0.48448, 0.48549, 0.48649, 0.48749, 0.48849, 0.48949, 0.49049, 0.49149, 0.49249, 0.49349, 0.49449, 0.4955, 0.4965, 0.4975, 0.4985, 0.4995, 0.5005, 0.5015, 0.5025, 0.5035,

0.5045, 0.50551, 0.50651, 0.50751, 0.50851, 0.50951, 0.51051, 0.51151, 0.51251, 0.51351, 0.51451, 0.51552, 0.51652, 0.51752, 0.51852, 0.51952, 0.52052, 0.52152, 0.52252, 0.52352, 0.52452, 0.52553, 0.52653, 0.52753,

0.52853, 0.52953, 0.53053, 0.53153, 0.53253, 0.53353, 0.53453, 0.53554, 0.53654, 0.53754, 0.53854, 0.53954, 0.54054, 0.54154, 0.54254, 0.54354, 0.54454, 0.54555, 0.54655, 0.54755, 0.54855, 0.54955, 0.55055, 0.55155,

0.55255, 0.55355, 0.55455, 0.55556, 0.55656, 0.55756, 0.55856, 0.55956, 0.56056, 0.56156, 0.56256, 0.56356, 0.56456, 0.56557, 0.56657, 0.56757, 0.56857, 0.56957, 0.57057, 0.57157, 0.57257, 0.57357, 0.57457, 0.57558,

0.57658, 0.57758, 0.57858, 0.57958, 0.58058, 0.58158, 0.58258, 0.58358, 0.58458, 0.58559, 0.58659, 0.58759, 0.58859, 0.58959, 0.59059, 0.59159, 0.59259, 0.59359, 0.59459, 0.5956, 0.5966, 0.5976, 0.5986, 0.5996,

0.6006, 0.6016, 0.6026, 0.6036, 0.6046, 0.60561, 0.60661, 0.60761, 0.60861, 0.60961, 0.61061, 0.61161, 0.61261, 0.61361, 0.61461, 0.61562, 0.61662, 0.61762, 0.61862, 0.61962, 0.62062, 0.62162, 0.62262, 0.62362,

0.62462, 0.62563, 0.62663, 0.62763, 0.62863, 0.62963, 0.63063, 0.63163, 0.63263, 0.63363, 0.63463, 0.63564, 0.63664, 0.63764, 0.63864, 0.63964, 0.64064, 0.64164, 0.64264, 0.64364, 0.64464, 0.64565, 0.64665, 0.64765,

0.64865, 0.64965, 0.65065, 0.65165, 0.65265, 0.65365, 0.65465, 0.65566, 0.65666, 0.65766, 0.65866, 0.65966, 0.66066, 0.66166, 0.66266, 0.66366, 0.66466, 0.66567, 0.66667, 0.66767, 0.66867, 0.66967, 0.67067, 0.67167,

0.67267, 0.67367, 0.67467, 0.67568, 0.67668, 0.67768, 0.67868, 0.67968, 0.68068, 0.68168, 0.68268, 0.68368, 0.68468, 0.68569, 0.68669, 0.68769, 0.68869, 0.68969, 0.69069, 0.69169, 0.69269, 0.69369, 0.69469, 0.6957,

0.6967, 0.6977, 0.6987, 0.6997, 0.7007, 0.7017, 0.7027, 0.7037, 0.7047, 0.70571, 0.70671, 0.70771, 0.70871, 0.70971, 0.71071, 0.71171, 0.71271, 0.71371, 0.71471, 0.71572, 0.71672, 0.71772, 0.71872, 0.71972,

0.72072, 0.72172, 0.72272, 0.72372, 0.72472, 0.72573, 0.72673, 0.72773, 0.72873, 0.72973, 0.73073, 0.73173, 0.73273, 0.73373, 0.73473, 0.73574, 0.73674, 0.73774, 0.73874, 0.73974, 0.74074, 0.74174, 0.74274, 0.74374,

0.74474, 0.74575, 0.74675, 0.74775, 0.74875, 0.74975, 0.75075, 0.75175, 0.75275, 0.75375, 0.75475, 0.75576, 0.75676, 0.75776, 0.75876, 0.75976, 0.76076, 0.76176, 0.76276, 0.76376, 0.76476, 0.76577, 0.76677, 0.76777,

0.76877, 0.76977, 0.77077, 0.77177, 0.77277, 0.77377, 0.77477, 0.77578, 0.77678, 0.77778, 0.77878, 0.77978, 0.78078, 0.78178, 0.78278, 0.78378, 0.78478, 0.78579, 0.78679, 0.78779, 0.78879, 0.78979, 0.79079, 0.79179,

0.79279, 0.79379, 0.79479, 0.7958, 0.7968, 0.7978, 0.7988, 0.7998, 0.8008, 0.8018, 0.8028, 0.8038, 0.8048, 0.80581, 0.80681, 0.80781, 0.80881, 0.80981, 0.81081, 0.81181, 0.81281, 0.81381, 0.81481, 0.81582,

0.81682, 0.81782, 0.81882, 0.81982, 0.82082, 0.82182, 0.82282, 0.82382, 0.82482, 0.82583, 0.82683, 0.82783, 0.82883, 0.82983, 0.83083, 0.83183, 0.83283, 0.83383, 0.83483, 0.83584, 0.83684, 0.83784, 0.83884, 0.83984,

0.84084, 0.84184, 0.84284, 0.84384, 0.84484, 0.84585, 0.84685, 0.84785, 0.84885, 0.84985, 0.85085, 0.85185, 0.85285, 0.85385, 0.85485, 0.85586, 0.85686, 0.85786, 0.85886, 0.85986, 0.86086, 0.86186, 0.86286, 0.86386,

0.86486, 0.86587, 0.86687, 0.86787, 0.86887, 0.86987, 0.87087, 0.87187, 0.87287, 0.87387, 0.87487, 0.87588, 0.87688, 0.87788, 0.87888, 0.87988, 0.88088, 0.88188, 0.88288, 0.88388, 0.88488, 0.88589, 0.88689, 0.88789,

0.88889, 0.88989, 0.89089, 0.89189, 0.89289, 0.89389, 0.89489, 0.8959, 0.8969, 0.8979, 0.8989, 0.8999, 0.9009, 0.9019, 0.9029, 0.9039, 0.9049, 0.90591, 0.90691, 0.90791, 0.90891, 0.90991, 0.91091, 0.91191,

0.91291, 0.91391, 0.91491, 0.91592, 0.91692, 0.91792, 0.91892, 0.91992, 0.92092, 0.92192, 0.92292, 0.92392, 0.92492, 0.92593, 0.92693, 0.92793, 0.92893, 0.92993, 0.93093, 0.93193, 0.93293, 0.93393, 0.93493, 0.93594,

0.93694, 0.93794, 0.93894, 0.93994, 0.94094, 0.94194, 0.94294, 0.94394, 0.94494, 0.94595, 0.94695, 0.94795, 0.94895, 0.94995, 0.95095, 0.95195, 0.95295, 0.95395, 0.95495, 0.95596, 0.95696, 0.95796, 0.95896, 0.95996,

0.96096, 0.96196, 0.96296, 0.96396, 0.96496, 0.96597, 0.96697, 0.96797, 0.96897, 0.96997, 0.97097, 0.97197, 0.97297, 0.97397, 0.97497, 0.97598, 0.97698, 0.97798, 0.97898, 0.97998, 0.98098, 0.98198, 0.98298, 0.98398,

0.98498, 0.98599, 0.98699, 0.98799, 0.98899, 0.98999, 0.99099, 0.99199, 0.99299, 0.99399, 0.99499, 0.996, 0.997, 0.998, 0.999, 1]), array([[ 0.015357, 0.015357, 0.020852, 0.027857, 0.032791, 0.041947, 0.067011, 0.12393, 0.20001, 0.25667, 0.2971, 0.32484, 0.35085, 0.37583, 0.39609, 0.41372, 0.43373, 0.44523, 0.45782, 0.47059, 0.48386, 0.49506, 0.50345,

0.51362, 0.52509, 0.53364, 0.54213, 0.5496, 0.55768, 0.56569, 0.57392, 0.58055, 0.58735, 0.59363, 0.59783, 0.60445, 0.60935, 0.61506, 0.61927, 0.62085, 0.62716, 0.63073, 0.63471, 0.64376, 0.64805, 0.65499,

0.65767, 0.66311, 0.6674, 0.67059, 0.67548, 0.67961, 0.68251, 0.68534, 0.69003, 0.6919, 0.69584, 0.69761, 0.70242, 0.70519, 0.70735, 0.71072, 0.71345, 0.71462, 0.71676, 0.71911, 0.71947, 0.72247, 0.7243,

0.72689, 0.72934, 0.72908, 0.73007, 0.73072, 0.73441, 0.73534, 0.73861, 0.74065, 0.74373, 0.74612, 0.74653, 0.74787, 0.74976, 0.75162, 0.75216, 0.75434, 0.75783, 0.75937, 0.76074, 0.7617, 0.76308, 0.76547,

0.76735, 0.77043, 0.77425, 0.77591, 0.77675, 0.77751, 0.77824, 0.77913, 0.78095, 0.78147, 0.7823, 0.78279, 0.78409, 0.78478, 0.78518, 0.78653, 0.78569, 0.78642, 0.78733, 0.78688, 0.78729, 0.78796, 0.78942,

0.7906, 0.79121, 0.79424, 0.79439, 0.79501, 0.79555, 0.79636, 0.79681, 0.79782, 0.79855, 0.79899, 0.79963, 0.80028, 0.80075, 0.80158, 0.80247, 0.8029, 0.80522, 0.80586, 0.80651, 0.80742, 0.8076, 0.80779,

0.80798, 0.80901, 0.81047, 0.81123, 0.81344, 0.8148, 0.81538, 0.81577, 0.81538, 0.81664, 0.81752, 0.81754, 0.81586, 0.81625, 0.81751, 0.81916, 0.81961, 0.82007, 0.82053, 0.82089, 0.82125, 0.82153, 0.8218,

0.82206, 0.8232, 0.82458, 0.82478, 0.82497, 0.82517, 0.82536, 0.82606, 0.82658, 0.82703, 0.82756, 0.82803, 0.82843, 0.82928, 0.82856, 0.82925, 0.82959, 0.82994, 0.83035, 0.83077, 0.83268, 0.83298, 0.83327,

0.83439, 0.83493, 0.83594, 0.83621, 0.83648, 0.83765, 0.83818, 0.83807, 0.83757, 0.83654, 0.83643, 0.83752, 0.83766, 0.8378, 0.83794, 0.83808, 0.83822, 0.83864, 0.83912, 0.8393, 0.83948, 0.83966, 0.83984,

0.84081, 0.84348, 0.84379, 0.84411, 0.84346, 0.84251, 0.84192, 0.84212, 0.84231, 0.8425, 0.84269, 0.84453, 0.8456, 0.84591, 0.84622, 0.84741, 0.848, 0.84815, 0.8483, 0.84844, 0.84859, 0.84874, 0.84878,

0.8482, 0.84861, 0.84958, 0.84994, 0.8503, 0.85079, 0.85116, 0.85093, 0.85071, 0.85048, 0.85026, 0.85004, 0.84632, 0.84524, 0.8455, 0.84576, 0.84603, 0.84556, 0.84493, 0.84618, 0.84767, 0.84907, 0.84962,

0.84996, 0.85031, 0.85066, 0.85102, 0.85049, 0.85017, 0.84953, 0.84827, 0.84763, 0.84692, 0.84591, 0.84701, 0.84725, 0.8475, 0.84777, 0.84815, 0.84854, 0.84954, 0.85115, 0.85145, 0.85156, 0.85168, 0.8518,

0.85192, 0.85204, 0.85216, 0.85245, 0.85299, 0.85202, 0.85077, 0.85103, 0.8513, 0.85157, 0.85205, 0.85255, 0.8528, 0.85305, 0.85329, 0.85383, 0.85507, 0.85628, 0.85654, 0.85681, 0.85707, 0.85727, 0.85744,

0.85761, 0.85778, 0.85795, 0.85828, 0.85896, 0.8594, 0.85982, 0.86026, 0.86072, 0.86096, 0.8611, 0.86124, 0.86138, 0.86152, 0.86165, 0.86179, 0.86259, 0.86212, 0.86165, 0.86254, 0.86299, 0.86344, 0.8638,

0.86417, 0.86446, 0.8647, 0.86493, 0.86516, 0.86564, 0.86621, 0.86607, 0.86592, 0.86578, 0.86564, 0.8655, 0.86536, 0.86522, 0.86507, 0.86597, 0.8662, 0.86643, 0.86667, 0.86693, 0.86785, 0.86799, 0.86813,

0.86828, 0.86842, 0.86856, 0.8687, 0.86781, 0.8682, 0.86802, 0.86785, 0.86767, 0.8675, 0.86733, 0.86715, 0.86698, 0.86773, 0.86652, 0.86596, 0.86543, 0.866, 0.86653, 0.86699, 0.86739, 0.86761, 0.86784,

0.86806, 0.86828, 0.86785, 0.86734, 0.86673, 0.86597, 0.86456, 0.86473, 0.86491, 0.86509, 0.86527, 0.86544, 0.86565, 0.86585, 0.86606, 0.86626, 0.86646, 0.86663, 0.8668, 0.86697, 0.86714, 0.86732, 0.86737,

0.86726, 0.86715, 0.86704, 0.86693, 0.86682, 0.86671, 0.8666, 0.86649, 0.86638, 0.86627, 0.86616, 0.86585, 0.86548, 0.86512, 0.86532, 0.86557, 0.86515, 0.86473, 0.86626, 0.86506, 0.86601, 0.8663, 0.8666,

0.86689, 0.86705, 0.86722, 0.86738, 0.86754, 0.8677, 0.86786, 0.86804, 0.86822, 0.86839, 0.86857, 0.86875, 0.87082, 0.87062, 0.87041, 0.87021, 0.87001, 0.8698, 0.8696, 0.86984, 0.87017, 0.8705, 0.87119,

0.86966, 0.86803, 0.86712, 0.86641, 0.86666, 0.86699, 0.86732, 0.86711, 0.86683, 0.86655, 0.86627, 0.8662, 0.8669, 0.86543, 0.86444, 0.86455, 0.86467, 0.86478, 0.86489, 0.865, 0.86511, 0.86522, 0.86534,

0.86547, 0.86569, 0.86592, 0.86615, 0.86637, 0.86741, 0.86499, 0.86469, 0.86456, 0.86444, 0.86431, 0.86418, 0.86406, 0.86393, 0.8638, 0.86368, 0.86355, 0.86419, 0.86537, 0.86559, 0.86569, 0.86579, 0.86589,

0.86599, 0.8661, 0.8662, 0.8663, 0.8664, 0.8665, 0.86592, 0.86528, 0.86348, 0.86358, 0.86388, 0.86419, 0.86449, 0.86467, 0.86483, 0.86498, 0.86514, 0.8653, 0.86545, 0.86517, 0.86419, 0.86408, 0.86398,

0.86387, 0.86377, 0.86366, 0.86356, 0.86345, 0.86335, 0.86324, 0.86314, 0.86303, 0.86293, 0.86172, 0.86115, 0.86071, 0.86028, 0.86008, 0.85994, 0.8598, 0.85967, 0.85953, 0.85939, 0.85925, 0.85911, 0.85897,

0.85884, 0.85854, 0.85825, 0.85795, 0.85766, 0.85729, 0.85682, 0.85634, 0.85664, 0.85751, 0.8581, 0.85769, 0.85728, 0.85686, 0.85698, 0.85719, 0.8574, 0.8576, 0.85781, 0.85874, 0.85819, 0.85753, 0.85822,

0.85892, 0.85976, 0.86136, 0.86174, 0.8619, 0.86205, 0.8622, 0.86236, 0.86251, 0.86264, 0.86254, 0.86244, 0.86234, 0.86224, 0.86214, 0.86204, 0.86194, 0.86184, 0.86174, 0.86164, 0.86154, 0.86143, 0.86133,

0.86211, 0.86181, 0.86124, 0.85964, 0.85816, 0.85787, 0.85758, 0.85729, 0.857, 0.85726, 0.85738, 0.85667, 0.85613, 0.85568, 0.85523, 0.85377, 0.85398, 0.85419, 0.8544, 0.85461, 0.85447, 0.85408, 0.85369,

0.8533, 0.85167, 0.85142, 0.85117, 0.85092, 0.85067, 0.8504, 0.85009, 0.84978, 0.84946, 0.84915, 0.84989, 0.85023, 0.85036, 0.8505, 0.85064, 0.85077, 0.85091, 0.85104, 0.85114, 0.8507, 0.85026, 0.84982,

0.84819, 0.84763, 0.84708, 0.84627, 0.84526, 0.8437, 0.8412, 0.84186, 0.84207, 0.84167, 0.84126, 0.84086, 0.8395, 0.83949, 0.83964, 0.83979, 0.83994, 0.84009, 0.84024, 0.84039, 0.8383, 0.83571, 0.83333,

0.83298, 0.83224, 0.83166, 0.83134, 0.83176, 0.83218, 0.83126, 0.82997, 0.82971, 0.83027, 0.82947, 0.82737, 0.82352, 0.82229, 0.82156, 0.81907, 0.81686, 0.81634, 0.81582, 0.81423, 0.81333, 0.81276, 0.81099,

0.81033, 0.80984, 0.80934, 0.80857, 0.80775, 0.80685, 0.80583, 0.80545, 0.80517, 0.80489, 0.80461, 0.80433, 0.80256, 0.79997, 0.79723, 0.79631, 0.79615, 0.79599, 0.79583, 0.79567, 0.79551, 0.79535, 0.79519,

0.79503, 0.79487, 0.79437, 0.79336, 0.79252, 0.79172, 0.78968, 0.78833, 0.78788, 0.78743, 0.78697, 0.78332, 0.7827, 0.78208, 0.78166, 0.78126, 0.78086, 0.78046, 0.77918, 0.77656, 0.77511, 0.77431, 0.77056,

0.76964, 0.76876, 0.76797, 0.76723, 0.76661, 0.766, 0.76633, 0.76659, 0.7663, 0.76601, 0.76573, 0.76544, 0.76515, 0.76556, 0.76611, 0.76563, 0.76513, 0.76462, 0.76201, 0.75959, 0.75838, 0.7556, 0.75451,

0.75303, 0.7522, 0.75155, 0.74999, 0.74641, 0.74486, 0.74255, 0.74042, 0.7389, 0.73846, 0.73801, 0.73757, 0.73756, 0.73807, 0.73477, 0.73223, 0.73094, 0.73046, 0.72998, 0.72765, 0.72364, 0.72143, 0.71897,

0.7148, 0.71166, 0.71026, 0.70774, 0.70673, 0.70454, 0.69873, 0.69727, 0.69435, 0.69204, 0.68778, 0.68349, 0.68234, 0.67998, 0.67931, 0.67865, 0.67747, 0.67535, 0.67185, 0.66978, 0.6689, 0.66712, 0.66606,

0.66531, 0.66418, 0.66067, 0.66008, 0.6595, 0.65891, 0.65639, 0.65365, 0.6519, 0.65058, 0.64987, 0.64917, 0.648, 0.64669, 0.64547, 0.64238, 0.641, 0.63898, 0.63793, 0.63688, 0.63024, 0.62816, 0.62699,

0.62365, 0.62018, 0.61942, 0.61865, 0.61701, 0.61373, 0.60982, 0.60763, 0.60581, 0.60457, 0.59954, 0.59784, 0.59686, 0.59622, 0.59559, 0.59355, 0.58841, 0.5833, 0.58117, 0.57948, 0.57615, 0.56727, 0.56567,

0.56466, 0.56176, 0.55862, 0.55496, 0.54978, 0.5466, 0.54505, 0.54317, 0.53758, 0.53548, 0.53314, 0.53101, 0.52904, 0.52424, 0.52045, 0.51857, 0.5171, 0.51589, 0.51329, 0.51139, 0.50952, 0.50866, 0.50386,

0.50125, 0.5003, 0.49935, 0.49767, 0.49482, 0.49248, 0.48103, 0.47824, 0.47672, 0.47448, 0.47, 0.46102, 0.45964, 0.45732, 0.45353, 0.44743, 0.44581, 0.44326, 0.43798, 0.42991, 0.42405, 0.4217, 0.41154,

0.40619, 0.40376, 0.40103, 0.3981, 0.39352, 0.39209, 0.38822, 0.38559, 0.37827, 0.37092, 0.35879, 0.3561, 0.35236, 0.34891, 0.34318, 0.33453, 0.33187, 0.32963, 0.32298, 0.31746, 0.31173, 0.30559, 0.29812,

0.29531, 0.29309, 0.29066, 0.28689, 0.27999, 0.2704, 0.2623, 0.25752, 0.24308, 0.23459, 0.23267, 0.22715, 0.21591, 0.2062, 0.19951, 0.1936, 0.19174, 0.18899, 0.18364, 0.1806, 0.17045, 0.16718, 0.16445,

0.16289, 0.16134, 0.15955, 0.15768, 0.14006, 0.12928, 0.11929, 0.11476, 0.11234, 0.10339, 0.099627, 0.097329, 0.094506, 0.087775, 0.086626, 0.085474, 0.084322, 0.081972, 0.075662, 0.073084, 0.066207, 0.06194, 0.059482,

0.056092, 0.050715, 0.047541, 0.039899, 0.037918, 0.037081, 0.036243, 0.035404, 0.034565, 0.032412, 0.030095, 0.029216, 0.028336, 0.027455, 0.026574, 0.025597, 0.024348, 0.023097, 0.021844, 0.020925, 0.020068, 0.01921, 0.018352,

0.017493, 0.01356, 0.011892, 0.010592, 0.0092897, 0.007189, 0.004453, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]]), 'Confidence', 'F1'], [array([ 0, 0.001001, 0.002002, 0.003003, 0.004004, 0.005005, 0.006006, 0.007007, 0.008008, 0.009009, 0.01001, 0.011011, 0.012012, 0.013013, 0.014014, 0.015015, 0.016016, 0.017017, 0.018018, 0.019019, 0.02002, 0.021021, 0.022022, 0.023023,

0.024024, 0.025025, 0.026026, 0.027027, 0.028028, 0.029029, 0.03003, 0.031031, 0.032032, 0.033033, 0.034034, 0.035035, 0.036036, 0.037037, 0.038038, 0.039039, 0.04004, 0.041041, 0.042042, 0.043043, 0.044044, 0.045045, 0.046046, 0.047047,

0.048048, 0.049049, 0.05005, 0.051051, 0.052052, 0.053053, 0.054054, 0.055055, 0.056056, 0.057057, 0.058058, 0.059059, 0.06006, 0.061061, 0.062062, 0.063063, 0.064064, 0.065065, 0.066066, 0.067067, 0.068068, 0.069069, 0.07007, 0.071071,

0.072072, 0.073073, 0.074074, 0.075075, 0.076076, 0.077077, 0.078078, 0.079079, 0.08008, 0.081081, 0.082082, 0.083083, 0.084084, 0.085085, 0.086086, 0.087087, 0.088088, 0.089089, 0.09009, 0.091091, 0.092092, 0.093093, 0.094094, 0.095095,

0.096096, 0.097097, 0.098098, 0.099099, 0.1001, 0.1011, 0.1021, 0.1031, 0.1041, 0.10511, 0.10611, 0.10711, 0.10811, 0.10911, 0.11011, 0.11111, 0.11211, 0.11311, 0.11411, 0.11512, 0.11612, 0.11712, 0.11812, 0.11912,

0.12012, 0.12112, 0.12212, 0.12312, 0.12412, 0.12513, 0.12613, 0.12713, 0.12813, 0.12913, 0.13013, 0.13113, 0.13213, 0.13313, 0.13413, 0.13514, 0.13614, 0.13714, 0.13814, 0.13914, 0.14014, 0.14114, 0.14214, 0.14314,

0.14414, 0.14515, 0.14615, 0.14715, 0.14815, 0.14915, 0.15015, 0.15115, 0.15215, 0.15315, 0.15415, 0.15516, 0.15616, 0.15716, 0.15816, 0.15916, 0.16016, 0.16116, 0.16216, 0.16316, 0.16416, 0.16517, 0.16617, 0.16717,

0.16817, 0.16917, 0.17017, 0.17117, 0.17217, 0.17317, 0.17417, 0.17518, 0.17618, 0.17718, 0.17818, 0.17918, 0.18018, 0.18118, 0.18218, 0.18318, 0.18418, 0.18519, 0.18619, 0.18719, 0.18819, 0.18919, 0.19019, 0.19119,

0.19219, 0.19319, 0.19419, 0.1952, 0.1962, 0.1972, 0.1982, 0.1992, 0.2002, 0.2012, 0.2022, 0.2032, 0.2042, 0.20521, 0.20621, 0.20721, 0.20821, 0.20921, 0.21021, 0.21121, 0.21221, 0.21321, 0.21421, 0.21522,

0.21622, 0.21722, 0.21822, 0.21922, 0.22022, 0.22122, 0.22222, 0.22322, 0.22422, 0.22523, 0.22623, 0.22723, 0.22823, 0.22923, 0.23023, 0.23123, 0.23223, 0.23323, 0.23423, 0.23524, 0.23624, 0.23724, 0.23824, 0.23924,

0.24024, 0.24124, 0.24224, 0.24324, 0.24424, 0.24525, 0.24625, 0.24725, 0.24825, 0.24925, 0.25025, 0.25125, 0.25225, 0.25325, 0.25425, 0.25526, 0.25626, 0.25726, 0.25826, 0.25926, 0.26026, 0.26126, 0.26226, 0.26326,

0.26426, 0.26527, 0.26627, 0.26727, 0.26827, 0.26927, 0.27027, 0.27127, 0.27227, 0.27327, 0.27427, 0.27528, 0.27628, 0.27728, 0.27828, 0.27928, 0.28028, 0.28128, 0.28228, 0.28328, 0.28428, 0.28529, 0.28629, 0.28729,

0.28829, 0.28929, 0.29029, 0.29129, 0.29229, 0.29329, 0.29429, 0.2953, 0.2963, 0.2973, 0.2983, 0.2993, 0.3003, 0.3013, 0.3023, 0.3033, 0.3043, 0.30531, 0.30631, 0.30731, 0.30831, 0.30931, 0.31031, 0.31131,

0.31231, 0.31331, 0.31431, 0.31532, 0.31632, 0.31732, 0.31832, 0.31932, 0.32032, 0.32132, 0.32232, 0.32332, 0.32432, 0.32533, 0.32633, 0.32733, 0.32833, 0.32933, 0.33033, 0.33133, 0.33233, 0.33333, 0.33433, 0.33534,

0.33634, 0.33734, 0.33834, 0.33934, 0.34034, 0.34134, 0.34234, 0.34334, 0.34434, 0.34535, 0.34635, 0.34735, 0.34835, 0.34935, 0.35035, 0.35135, 0.35235, 0.35335, 0.35435, 0.35536, 0.35636, 0.35736, 0.35836, 0.35936,

0.36036, 0.36136, 0.36236, 0.36336, 0.36436, 0.36537, 0.36637, 0.36737, 0.36837, 0.36937, 0.37037, 0.37137, 0.37237, 0.37337, 0.37437, 0.37538, 0.37638, 0.37738, 0.37838, 0.37938, 0.38038, 0.38138, 0.38238, 0.38338,

0.38438, 0.38539, 0.38639, 0.38739, 0.38839, 0.38939, 0.39039, 0.39139, 0.39239, 0.39339, 0.39439, 0.3954, 0.3964, 0.3974, 0.3984, 0.3994, 0.4004, 0.4014, 0.4024, 0.4034, 0.4044, 0.40541, 0.40641, 0.40741,

0.40841, 0.40941, 0.41041, 0.41141, 0.41241, 0.41341, 0.41441, 0.41542, 0.41642, 0.41742, 0.41842, 0.41942, 0.42042, 0.42142, 0.42242, 0.42342, 0.42442, 0.42543, 0.42643, 0.42743, 0.42843, 0.42943, 0.43043, 0.43143,

0.43243, 0.43343, 0.43443, 0.43544, 0.43644, 0.43744, 0.43844, 0.43944, 0.44044, 0.44144, 0.44244, 0.44344, 0.44444, 0.44545, 0.44645, 0.44745, 0.44845, 0.44945, 0.45045, 0.45145, 0.45245, 0.45345, 0.45445, 0.45546,

0.45646, 0.45746, 0.45846, 0.45946, 0.46046, 0.46146, 0.46246, 0.46346, 0.46446, 0.46547, 0.46647, 0.46747, 0.46847, 0.46947, 0.47047, 0.47147, 0.47247, 0.47347, 0.47447, 0.47548, 0.47648, 0.47748, 0.47848, 0.47948,

0.48048, 0.48148, 0.48248, 0.48348, 0.48448, 0.48549, 0.48649, 0.48749, 0.48849, 0.48949, 0.49049, 0.49149, 0.49249, 0.49349, 0.49449, 0.4955, 0.4965, 0.4975, 0.4985, 0.4995, 0.5005, 0.5015, 0.5025, 0.5035,

0.5045, 0.50551, 0.50651, 0.50751, 0.50851, 0.50951, 0.51051, 0.51151, 0.51251, 0.51351, 0.51451, 0.51552, 0.51652, 0.51752, 0.51852, 0.51952, 0.52052, 0.52152, 0.52252, 0.52352, 0.52452, 0.52553, 0.52653, 0.52753,

0.52853, 0.52953, 0.53053, 0.53153, 0.53253, 0.53353, 0.53453, 0.53554, 0.53654, 0.53754, 0.53854, 0.53954, 0.54054, 0.54154, 0.54254, 0.54354, 0.54454, 0.54555, 0.54655, 0.54755, 0.54855, 0.54955, 0.55055, 0.55155,

0.55255, 0.55355, 0.55455, 0.55556, 0.55656, 0.55756, 0.55856, 0.55956, 0.56056, 0.56156, 0.56256, 0.56356, 0.56456, 0.56557, 0.56657, 0.56757, 0.56857, 0.56957, 0.57057, 0.57157, 0.57257, 0.57357, 0.57457, 0.57558,

0.57658, 0.57758, 0.57858, 0.57958, 0.58058, 0.58158, 0.58258, 0.58358, 0.58458, 0.58559, 0.58659, 0.58759, 0.58859, 0.58959, 0.59059, 0.59159, 0.59259, 0.59359, 0.59459, 0.5956, 0.5966, 0.5976, 0.5986, 0.5996,

0.6006, 0.6016, 0.6026, 0.6036, 0.6046, 0.60561, 0.60661, 0.60761, 0.60861, 0.60961, 0.61061, 0.61161, 0.61261, 0.61361, 0.61461, 0.61562, 0.61662, 0.61762, 0.61862, 0.61962, 0.62062, 0.62162, 0.62262, 0.62362,

0.62462, 0.62563, 0.62663, 0.62763, 0.62863, 0.62963, 0.63063, 0.63163, 0.63263, 0.63363, 0.63463, 0.63564, 0.63664, 0.63764, 0.63864, 0.63964, 0.64064, 0.64164, 0.64264, 0.64364, 0.64464, 0.64565, 0.64665, 0.64765,

0.64865, 0.64965, 0.65065, 0.65165, 0.65265, 0.65365, 0.65465, 0.65566, 0.65666, 0.65766, 0.65866, 0.65966, 0.66066, 0.66166, 0.66266, 0.66366, 0.66466, 0.66567, 0.66667, 0.66767, 0.66867, 0.66967, 0.67067, 0.67167,

0.67267, 0.67367, 0.67467, 0.67568, 0.67668, 0.67768, 0.67868, 0.67968, 0.68068, 0.68168, 0.68268, 0.68368, 0.68468, 0.68569, 0.68669, 0.68769, 0.68869, 0.68969, 0.69069, 0.69169, 0.69269, 0.69369, 0.69469, 0.6957,

0.6967, 0.6977, 0.6987, 0.6997, 0.7007, 0.7017, 0.7027, 0.7037, 0.7047, 0.70571, 0.70671, 0.70771, 0.70871, 0.70971, 0.71071, 0.71171, 0.71271, 0.71371, 0.71471, 0.71572, 0.71672, 0.71772, 0.71872, 0.71972,

0.72072, 0.72172, 0.72272, 0.72372, 0.72472, 0.72573, 0.72673, 0.72773, 0.72873, 0.72973, 0.73073, 0.73173, 0.73273, 0.73373, 0.73473, 0.73574, 0.73674, 0.73774, 0.73874, 0.73974, 0.74074, 0.74174, 0.74274, 0.74374,

0.74474, 0.74575, 0.74675, 0.74775, 0.74875, 0.74975, 0.75075, 0.75175, 0.75275, 0.75375, 0.75475, 0.75576, 0.75676, 0.75776, 0.75876, 0.75976, 0.76076, 0.76176, 0.76276, 0.76376, 0.76476, 0.76577, 0.76677, 0.76777,

0.76877, 0.76977, 0.77077, 0.77177, 0.77277, 0.77377, 0.77477, 0.77578, 0.77678, 0.77778, 0.77878, 0.77978, 0.78078, 0.78178, 0.78278, 0.78378, 0.78478, 0.78579, 0.78679, 0.78779, 0.78879, 0.78979, 0.79079, 0.79179,

0.79279, 0.79379, 0.79479, 0.7958, 0.7968, 0.7978, 0.7988, 0.7998, 0.8008, 0.8018, 0.8028, 0.8038, 0.8048, 0.80581, 0.80681, 0.80781, 0.80881, 0.80981, 0.81081, 0.81181, 0.81281, 0.81381, 0.81481, 0.81582,

0.81682, 0.81782, 0.81882, 0.81982, 0.82082, 0.82182, 0.82282, 0.82382, 0.82482, 0.82583, 0.82683, 0.82783, 0.82883, 0.82983, 0.83083, 0.83183, 0.83283, 0.83383, 0.83483, 0.83584, 0.83684, 0.83784, 0.83884, 0.83984,

0.84084, 0.84184, 0.84284, 0.84384, 0.84484, 0.84585, 0.84685, 0.84785, 0.84885, 0.84985, 0.85085, 0.85185, 0.85285, 0.85385, 0.85485, 0.85586, 0.85686, 0.85786, 0.85886, 0.85986, 0.86086, 0.86186, 0.86286, 0.86386,

0.86486, 0.86587, 0.86687, 0.86787, 0.86887, 0.86987, 0.87087, 0.87187, 0.87287, 0.87387, 0.87487, 0.87588, 0.87688, 0.87788, 0.87888, 0.87988, 0.88088, 0.88188, 0.88288, 0.88388, 0.88488, 0.88589, 0.88689, 0.88789,

0.88889, 0.88989, 0.89089, 0.89189, 0.89289, 0.89389, 0.89489, 0.8959, 0.8969, 0.8979, 0.8989, 0.8999, 0.9009, 0.9019, 0.9029, 0.9039, 0.9049, 0.90591, 0.90691, 0.90791, 0.90891, 0.90991, 0.91091, 0.91191,

0.91291, 0.91391, 0.91491, 0.91592, 0.91692, 0.91792, 0.91892, 0.91992, 0.92092, 0.92192, 0.92292, 0.92392, 0.92492, 0.92593, 0.92693, 0.92793, 0.92893, 0.92993, 0.93093, 0.93193, 0.93293, 0.93393, 0.93493, 0.93594,

0.93694, 0.93794, 0.93894, 0.93994, 0.94094, 0.94194, 0.94294, 0.94394, 0.94494, 0.94595, 0.94695, 0.94795, 0.94895, 0.94995, 0.95095, 0.95195, 0.95295, 0.95395, 0.95495, 0.95596, 0.95696, 0.95796, 0.95896, 0.95996,

0.96096, 0.96196, 0.96296, 0.96396, 0.96496, 0.96597, 0.96697, 0.96797, 0.96897, 0.96997, 0.97097, 0.97197, 0.97297, 0.97397, 0.97497, 0.97598, 0.97698, 0.97798, 0.97898, 0.97998, 0.98098, 0.98198, 0.98298, 0.98398,

0.98498, 0.98599, 0.98699, 0.98799, 0.98899, 0.98999, 0.99099, 0.99199, 0.99299, 0.99399, 0.99499, 0.996, 0.997, 0.998, 0.999, 1]), array([[ 0.0077381, 0.0077381, 0.010536, 0.014126, 0.016671, 0.021427, 0.034683, 0.066129, 0.11137, 0.14782, 0.17529, 0.19494, 0.21397, 0.23285, 0.24861, 0.26282, 0.27918, 0.28898, 0.29967, 0.31072, 0.32263, 0.33267, 0.34056,

0.34994, 0.36068, 0.36912, 0.37763, 0.38491, 0.39289, 0.40088, 0.40919, 0.41598, 0.42299, 0.42997, 0.43439, 0.44142, 0.44666, 0.45283, 0.45791, 0.45964, 0.46659, 0.47056, 0.47499, 0.48521, 0.49067, 0.49868,

0.50178, 0.50815, 0.5132, 0.51698, 0.52282, 0.52778, 0.53197, 0.53612, 0.54188, 0.54419, 0.54908, 0.55203, 0.55884, 0.56236, 0.56511, 0.56942, 0.57293, 0.57525, 0.57803, 0.5811, 0.5824, 0.58634, 0.58962,

0.59394, 0.59811, 0.59866, 0.59999, 0.60087, 0.60587, 0.60714, 0.61161, 0.61441, 0.61867, 0.62199, 0.62255, 0.62442, 0.62706, 0.62966, 0.63142, 0.63451, 0.63947, 0.64165, 0.64361, 0.64499, 0.64697, 0.65041,

0.65313, 0.6576, 0.66319, 0.66598, 0.668, 0.66913, 0.67021, 0.67152, 0.67424, 0.67502, 0.67625, 0.67699, 0.67893, 0.67997, 0.68057, 0.6826, 0.68252, 0.68363, 0.685, 0.68552, 0.68614, 0.68715, 0.68939,

0.69119, 0.69212, 0.69731, 0.69826, 0.69921, 0.70132, 0.70258, 0.70327, 0.70485, 0.70599, 0.70668, 0.70768, 0.7087, 0.70943, 0.71074, 0.71215, 0.71283, 0.71648, 0.71884, 0.71988, 0.72132, 0.72162, 0.72193,

0.72223, 0.72387, 0.72621, 0.72743, 0.73099, 0.7332, 0.73414, 0.73476, 0.73485, 0.73759, 0.73904, 0.73964, 0.73919, 0.73983, 0.7419, 0.74462, 0.74537, 0.74613, 0.74689, 0.74749, 0.74808, 0.74855, 0.74899,

0.74942, 0.75133, 0.75363, 0.75396, 0.75428, 0.75461, 0.75494, 0.7561, 0.75697, 0.75773, 0.75862, 0.75942, 0.76008, 0.76182, 0.76155, 0.76299, 0.76358, 0.76417, 0.76486, 0.76558, 0.76883, 0.76934, 0.76984,

0.77176, 0.77267, 0.77441, 0.77488, 0.77534, 0.77734, 0.77827, 0.7785, 0.77832, 0.77866, 0.78009, 0.78199, 0.78223, 0.78248, 0.78272, 0.78297, 0.78321, 0.78396, 0.78479, 0.78511, 0.78543, 0.78574, 0.78606,

0.78774, 0.79246, 0.79301, 0.79356, 0.79356, 0.79324, 0.7931, 0.79344, 0.79378, 0.79412, 0.79447, 0.79773, 0.79965, 0.80021, 0.80076, 0.80289, 0.80396, 0.80422, 0.80448, 0.80475, 0.80501, 0.80527, 0.80547,

0.80529, 0.80682, 0.80857, 0.80922, 0.80987, 0.81076, 0.8115, 0.81143, 0.81136, 0.81129, 0.81121, 0.81114, 0.80998, 0.80971, 0.8102, 0.81068, 0.81116, 0.81108, 0.81089, 0.81329, 0.81605, 0.81865, 0.81967,

0.82031, 0.82095, 0.82161, 0.82227, 0.82226, 0.82261, 0.8234, 0.82302, 0.82283, 0.82262, 0.82232, 0.82439, 0.82486, 0.82533, 0.82584, 0.82657, 0.8273, 0.82921, 0.83228, 0.83284, 0.83307, 0.8333, 0.83353,

0.83375, 0.83398, 0.83421, 0.83477, 0.83609, 0.83581, 0.83557, 0.83609, 0.83661, 0.83713, 0.83806, 0.83902, 0.8395, 0.83998, 0.84046, 0.84151, 0.84392, 0.84628, 0.8468, 0.84731, 0.84782, 0.84821, 0.84854,

0.84888, 0.84922, 0.84955, 0.8502, 0.85152, 0.85239, 0.85322, 0.8541, 0.855, 0.85548, 0.85575, 0.85602, 0.8563, 0.85657, 0.85684, 0.85712, 0.85896, 0.85885, 0.85873, 0.86075, 0.86166, 0.86254, 0.86327,

0.86401, 0.86459, 0.86506, 0.86553, 0.866, 0.86694, 0.86813, 0.86809, 0.86806, 0.86803, 0.86799, 0.86796, 0.86793, 0.8679, 0.86786, 0.86983, 0.8703, 0.87077, 0.87124, 0.87178, 0.87364, 0.87392, 0.87421,

0.8745, 0.87478, 0.87507, 0.87535, 0.87534, 0.87892, 0.87888, 0.87884, 0.87881, 0.87877, 0.87873, 0.87869, 0.87865, 0.88059, 0.88033, 0.88021, 0.88013, 0.88132, 0.88242, 0.88338, 0.88421, 0.88467, 0.88513,

0.8856, 0.88606, 0.88601, 0.88591, 0.88578, 0.88562, 0.88544, 0.88581, 0.88618, 0.88656, 0.88693, 0.8873, 0.88772, 0.88816, 0.88859, 0.88902, 0.88944, 0.8898, 0.89016, 0.89052, 0.89088, 0.89124, 0.89145,

0.89142, 0.8914, 0.89138, 0.89136, 0.89134, 0.89132, 0.89129, 0.89127, 0.89125, 0.89123, 0.89121, 0.89115, 0.89107, 0.891, 0.89192, 0.89297, 0.89289, 0.89281, 0.89691, 0.89887, 0.90092, 0.90156, 0.9022,

0.90284, 0.90319, 0.90354, 0.90389, 0.90425, 0.9046, 0.90495, 0.90533, 0.90572, 0.9061, 0.90649, 0.90688, 0.91148, 0.91144, 0.91141, 0.91138, 0.91134, 0.91131, 0.91128, 0.91187, 0.91259, 0.91332, 0.9156,

0.91536, 0.91511, 0.91496, 0.91485, 0.91552, 0.91626, 0.91699, 0.91703, 0.91699, 0.91695, 0.9169, 0.91721, 0.91878, 0.91887, 0.91875, 0.91901, 0.91926, 0.91951, 0.91977, 0.92002, 0.92027, 0.92052, 0.92078,

0.92107, 0.92159, 0.9221, 0.92262, 0.92313, 0.92549, 0.92521, 0.92517, 0.92515, 0.92514, 0.92512, 0.9251, 0.92508, 0.92506, 0.92505, 0.92503, 0.92501, 0.92663, 0.92936, 0.92986, 0.93009, 0.93033, 0.93056,

0.9308, 0.93103, 0.93126, 0.9315, 0.93173, 0.93197, 0.93191, 0.93183, 0.9316, 0.93396, 0.93467, 0.93538, 0.93609, 0.93652, 0.93689, 0.93725, 0.93762, 0.93798, 0.93835, 0.93857, 0.93846, 0.93844, 0.93843,

0.93842, 0.93841, 0.93839, 0.93838, 0.93837, 0.93836, 0.93835, 0.93833, 0.93832, 0.93831, 0.93817, 0.9381, 0.93805, 0.93799, 0.93797, 0.93795, 0.93794, 0.93792, 0.93791, 0.93789, 0.93787, 0.93786, 0.93784,

0.93782, 0.93779, 0.93775, 0.93772, 0.93768, 0.93764, 0.93758, 0.93753, 0.93875, 0.94083, 0.9424, 0.94236, 0.94231, 0.94226, 0.94269, 0.94319, 0.9437, 0.9442, 0.94471, 0.94696, 0.94716, 0.94719, 0.94887,

0.95061, 0.95267, 0.9566, 0.95754, 0.95792, 0.9583, 0.95868, 0.95906, 0.95944, 0.95978, 0.95978, 0.95977, 0.95976, 0.95975, 0.95975, 0.95974, 0.95973, 0.95972, 0.95971, 0.95971, 0.9597, 0.95969, 0.95968,

0.96175, 0.96223, 0.96218, 0.96207, 0.96195, 0.96193, 0.96191, 0.96189, 0.96187, 0.96302, 0.96445, 0.9644, 0.96436, 0.96433, 0.9643, 0.96447, 0.96501, 0.96555, 0.96609, 0.96662, 0.96684, 0.96681, 0.96678,

0.96676, 0.96665, 0.96664, 0.96662, 0.9666, 0.96659, 0.96657, 0.96655, 0.96653, 0.96651, 0.96649, 0.96858, 0.96946, 0.96981, 0.97017, 0.97052, 0.97087, 0.97123, 0.97158, 0.97191, 0.97188, 0.97186, 0.97183,

0.97174, 0.97171, 0.97168, 0.97164, 0.97158, 0.97149, 0.97137, 0.97312, 0.97413, 0.97411, 0.97409, 0.97407, 0.974, 0.97436, 0.97476, 0.97517, 0.97558, 0.97598, 0.97639, 0.9768, 0.97671, 0.97659, 0.97648,

0.9793, 0.97927, 0.97925, 0.97953, 0.98069, 0.98185, 0.98211, 0.98206, 0.98311, 0.98498, 0.98496, 0.98489, 0.98477, 0.98474, 0.98471, 0.98464, 0.98457, 0.98455, 0.98453, 0.98448, 0.98446, 0.98444, 0.98438,

0.98436, 0.98434, 0.98433, 0.98738, 0.98735, 0.98733, 0.9873, 0.9873, 0.98729, 0.98728, 0.98727, 0.98727, 0.98722, 0.98715, 0.98708, 0.98705, 0.98705, 0.98705, 0.98704, 0.98704, 0.98703, 0.98703, 0.98702,

0.98702, 0.98702, 0.987, 0.98698, 0.98695, 0.98693, 0.98688, 0.98684, 0.98683, 0.98681, 0.9868, 0.9867, 0.98668, 0.98667, 0.98666, 0.98665, 0.98663, 0.98662, 0.98659, 0.98651, 0.98647, 0.98645, 0.98635,

0.98632, 0.98629, 0.98627, 0.98625, 0.98623, 0.98621, 0.98819, 0.98962, 0.98961, 0.9896, 0.9896, 0.98959, 0.98958, 0.99112, 0.99298, 0.99302, 0.99302, 0.99301, 0.99297, 0.99293, 0.99292, 0.99288, 0.99286,

0.99284, 0.99282, 0.99281, 0.99279, 0.99274, 0.99271, 0.99268, 0.99264, 0.99262, 0.99261, 0.9926, 0.9926, 0.99362, 0.99628, 0.99625, 0.99623, 0.99622, 0.99622, 0.99622, 0.9962, 0.99616, 0.99615, 0.99612,

0.99609, 0.99606, 0.99605, 0.99603, 0.99602, 0.996, 0.99595, 0.99594, 0.99591, 0.99589, 0.99585, 0.99581, 0.9958, 0.99578, 0.99577, 0.99577, 0.99576, 0.99574, 0.9957, 0.99568, 0.99567, 0.99566, 0.99565,

0.99564, 0.99563, 0.99559, 0.99559, 0.99558, 0.99558, 0.99555, 0.99552, 0.99551, 0.99549, 0.99548, 0.99548, 0.99547, 0.99545, 0.99544, 0.99541, 0.99539, 0.99537, 0.99536, 0.99535, 0.99528, 0.99525, 0.99524,

0.9952, 0.99517, 0.99516, 0.99515, 0.99513, 0.99509, 0.99505, 0.99502, 0.995, 0.99499, 0.99493, 0.99491, 0.99489, 0.99489, 0.99488, 0.99485, 0.99479, 0.99472, 0.9947, 0.99468, 0.99463, 0.99452, 0.99449,

0.99448, 0.99444, 0.9944, 0.99435, 0.99427, 0.99423, 0.99421, 0.99418, 0.9941, 0.99406, 0.99403, 0.994, 0.99397, 0.99389, 0.99383, 0.9938, 0.99378, 0.99376, 0.99372, 0.99368, 0.99365, 0.99761, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]]), 'Confidence', 'Precision'], [array([ 0, 0.001001, 0.002002, 0.003003, 0.004004, 0.005005, 0.006006, 0.007007, 0.008008, 0.009009, 0.01001, 0.011011, 0.012012, 0.013013, 0.014014, 0.015015, 0.016016, 0.017017, 0.018018, 0.019019, 0.02002, 0.021021, 0.022022, 0.023023,

0.024024, 0.025025, 0.026026, 0.027027, 0.028028, 0.029029, 0.03003, 0.031031, 0.032032, 0.033033, 0.034034, 0.035035, 0.036036, 0.037037, 0.038038, 0.039039, 0.04004, 0.041041, 0.042042, 0.043043, 0.044044, 0.045045, 0.046046, 0.047047,

0.048048, 0.049049, 0.05005, 0.051051, 0.052052, 0.053053, 0.054054, 0.055055, 0.056056, 0.057057, 0.058058, 0.059059, 0.06006, 0.061061, 0.062062, 0.063063, 0.064064, 0.065065, 0.066066, 0.067067, 0.068068, 0.069069, 0.07007, 0.071071,

0.072072, 0.073073, 0.074074, 0.075075, 0.076076, 0.077077, 0.078078, 0.079079, 0.08008, 0.081081, 0.082082, 0.083083, 0.084084, 0.085085, 0.086086, 0.087087, 0.088088, 0.089089, 0.09009, 0.091091, 0.092092, 0.093093, 0.094094, 0.095095,

0.096096, 0.097097, 0.098098, 0.099099, 0.1001, 0.1011, 0.1021, 0.1031, 0.1041, 0.10511, 0.10611, 0.10711, 0.10811, 0.10911, 0.11011, 0.11111, 0.11211, 0.11311, 0.11411, 0.11512, 0.11612, 0.11712, 0.11812, 0.11912,

0.12012, 0.12112, 0.12212, 0.12312, 0.12412, 0.12513, 0.12613, 0.12713, 0.12813, 0.12913, 0.13013, 0.13113, 0.13213, 0.13313, 0.13413, 0.13514, 0.13614, 0.13714, 0.13814, 0.13914, 0.14014, 0.14114, 0.14214, 0.14314,

0.14414, 0.14515, 0.14615, 0.14715, 0.14815, 0.14915, 0.15015, 0.15115, 0.15215, 0.15315, 0.15415, 0.15516, 0.15616, 0.15716, 0.15816, 0.15916, 0.16016, 0.16116, 0.16216, 0.16316, 0.16416, 0.16517, 0.16617, 0.16717,

0.16817, 0.16917, 0.17017, 0.17117, 0.17217, 0.17317, 0.17417, 0.17518, 0.17618, 0.17718, 0.17818, 0.17918, 0.18018, 0.18118, 0.18218, 0.18318, 0.18418, 0.18519, 0.18619, 0.18719, 0.18819, 0.18919, 0.19019, 0.19119,

0.19219, 0.19319, 0.19419, 0.1952, 0.1962, 0.1972, 0.1982, 0.1992, 0.2002, 0.2012, 0.2022, 0.2032, 0.2042, 0.20521, 0.20621, 0.20721, 0.20821, 0.20921, 0.21021, 0.21121, 0.21221, 0.21321, 0.21421, 0.21522,

0.21622, 0.21722, 0.21822, 0.21922, 0.22022, 0.22122, 0.22222, 0.22322, 0.22422, 0.22523, 0.22623, 0.22723, 0.22823, 0.22923, 0.23023, 0.23123, 0.23223, 0.23323, 0.23423, 0.23524, 0.23624, 0.23724, 0.23824, 0.23924,

0.24024, 0.24124, 0.24224, 0.24324, 0.24424, 0.24525, 0.24625, 0.24725, 0.24825, 0.24925, 0.25025, 0.25125, 0.25225, 0.25325, 0.25425, 0.25526, 0.25626, 0.25726, 0.25826, 0.25926, 0.26026, 0.26126, 0.26226, 0.26326,

0.26426, 0.26527, 0.26627, 0.26727, 0.26827, 0.26927, 0.27027, 0.27127, 0.27227, 0.27327, 0.27427, 0.27528, 0.27628, 0.27728, 0.27828, 0.27928, 0.28028, 0.28128, 0.28228, 0.28328, 0.28428, 0.28529, 0.28629, 0.28729,

0.28829, 0.28929, 0.29029, 0.29129, 0.29229, 0.29329, 0.29429, 0.2953, 0.2963, 0.2973, 0.2983, 0.2993, 0.3003, 0.3013, 0.3023, 0.3033, 0.3043, 0.30531, 0.30631, 0.30731, 0.30831, 0.30931, 0.31031, 0.31131,

0.31231, 0.31331, 0.31431, 0.31532, 0.31632, 0.31732, 0.31832, 0.31932, 0.32032, 0.32132, 0.32232, 0.32332, 0.32432, 0.32533, 0.32633, 0.32733, 0.32833, 0.32933, 0.33033, 0.33133, 0.33233, 0.33333, 0.33433, 0.33534,

0.33634, 0.33734, 0.33834, 0.33934, 0.34034, 0.34134, 0.34234, 0.34334, 0.34434, 0.34535, 0.34635, 0.34735, 0.34835, 0.34935, 0.35035, 0.35135, 0.35235, 0.35335, 0.35435, 0.35536, 0.35636, 0.35736, 0.35836, 0.35936,

0.36036, 0.36136, 0.36236, 0.36336, 0.36436, 0.36537, 0.36637, 0.36737, 0.36837, 0.36937, 0.37037, 0.37137, 0.37237, 0.37337, 0.37437, 0.37538, 0.37638, 0.37738, 0.37838, 0.37938, 0.38038, 0.38138, 0.38238, 0.38338,

0.38438, 0.38539, 0.38639, 0.38739, 0.38839, 0.38939, 0.39039, 0.39139, 0.39239, 0.39339, 0.39439, 0.3954, 0.3964, 0.3974, 0.3984, 0.3994, 0.4004, 0.4014, 0.4024, 0.4034, 0.4044, 0.40541, 0.40641, 0.40741,

0.40841, 0.40941, 0.41041, 0.41141, 0.41241, 0.41341, 0.41441, 0.41542, 0.41642, 0.41742, 0.41842, 0.41942, 0.42042, 0.42142, 0.42242, 0.42342, 0.42442, 0.42543, 0.42643, 0.42743, 0.42843, 0.42943, 0.43043, 0.43143,

0.43243, 0.43343, 0.43443, 0.43544, 0.43644, 0.43744, 0.43844, 0.43944, 0.44044, 0.44144, 0.44244, 0.44344, 0.44444, 0.44545, 0.44645, 0.44745, 0.44845, 0.44945, 0.45045, 0.45145, 0.45245, 0.45345, 0.45445, 0.45546,

0.45646, 0.45746, 0.45846, 0.45946, 0.46046, 0.46146, 0.46246, 0.46346, 0.46446, 0.46547, 0.46647, 0.46747, 0.46847, 0.46947, 0.47047, 0.47147, 0.47247, 0.47347, 0.47447, 0.47548, 0.47648, 0.47748, 0.47848, 0.47948,

0.48048, 0.48148, 0.48248, 0.48348, 0.48448, 0.48549, 0.48649, 0.48749, 0.48849, 0.48949, 0.49049, 0.49149, 0.49249, 0.49349, 0.49449, 0.4955, 0.4965, 0.4975, 0.4985, 0.4995, 0.5005, 0.5015, 0.5025, 0.5035,

0.5045, 0.50551, 0.50651, 0.50751, 0.50851, 0.50951, 0.51051, 0.51151, 0.51251, 0.51351, 0.51451, 0.51552, 0.51652, 0.51752, 0.51852, 0.51952, 0.52052, 0.52152, 0.52252, 0.52352, 0.52452, 0.52553, 0.52653, 0.52753,

0.52853, 0.52953, 0.53053, 0.53153, 0.53253, 0.53353, 0.53453, 0.53554, 0.53654, 0.53754, 0.53854, 0.53954, 0.54054, 0.54154, 0.54254, 0.54354, 0.54454, 0.54555, 0.54655, 0.54755, 0.54855, 0.54955, 0.55055, 0.55155,

0.55255, 0.55355, 0.55455, 0.55556, 0.55656, 0.55756, 0.55856, 0.55956, 0.56056, 0.56156, 0.56256, 0.56356, 0.56456, 0.56557, 0.56657, 0.56757, 0.56857, 0.56957, 0.57057, 0.57157, 0.57257, 0.57357, 0.57457, 0.57558,

0.57658, 0.57758, 0.57858, 0.57958, 0.58058, 0.58158, 0.58258, 0.58358, 0.58458, 0.58559, 0.58659, 0.58759, 0.58859, 0.58959, 0.59059, 0.59159, 0.59259, 0.59359, 0.59459, 0.5956, 0.5966, 0.5976, 0.5986, 0.5996,

0.6006, 0.6016, 0.6026, 0.6036, 0.6046, 0.60561, 0.60661, 0.60761, 0.60861, 0.60961, 0.61061, 0.61161, 0.61261, 0.61361, 0.61461, 0.61562, 0.61662, 0.61762, 0.61862, 0.61962, 0.62062, 0.62162, 0.62262, 0.62362,

0.62462, 0.62563, 0.62663, 0.62763, 0.62863, 0.62963, 0.63063, 0.63163, 0.63263, 0.63363, 0.63463, 0.63564, 0.63664, 0.63764, 0.63864, 0.63964, 0.64064, 0.64164, 0.64264, 0.64364, 0.64464, 0.64565, 0.64665, 0.64765,

0.64865, 0.64965, 0.65065, 0.65165, 0.65265, 0.65365, 0.65465, 0.65566, 0.65666, 0.65766, 0.65866, 0.65966, 0.66066, 0.66166, 0.66266, 0.66366, 0.66466, 0.66567, 0.66667, 0.66767, 0.66867, 0.66967, 0.67067, 0.67167,

0.67267, 0.67367, 0.67467, 0.67568, 0.67668, 0.67768, 0.67868, 0.67968, 0.68068, 0.68168, 0.68268, 0.68368, 0.68468, 0.68569, 0.68669, 0.68769, 0.68869, 0.68969, 0.69069, 0.69169, 0.69269, 0.69369, 0.69469, 0.6957,

0.6967, 0.6977, 0.6987, 0.6997, 0.7007, 0.7017, 0.7027, 0.7037, 0.7047, 0.70571, 0.70671, 0.70771, 0.70871, 0.70971, 0.71071, 0.71171, 0.71271, 0.71371, 0.71471, 0.71572, 0.71672, 0.71772, 0.71872, 0.71972,

0.72072, 0.72172, 0.72272, 0.72372, 0.72472, 0.72573, 0.72673, 0.72773, 0.72873, 0.72973, 0.73073, 0.73173, 0.73273, 0.73373, 0.73473, 0.73574, 0.73674, 0.73774, 0.73874, 0.73974, 0.74074, 0.74174, 0.74274, 0.74374,

0.74474, 0.74575, 0.74675, 0.74775, 0.74875, 0.74975, 0.75075, 0.75175, 0.75275, 0.75375, 0.75475, 0.75576, 0.75676, 0.75776, 0.75876, 0.75976, 0.76076, 0.76176, 0.76276, 0.76376, 0.76476, 0.76577, 0.76677, 0.76777,

0.76877, 0.76977, 0.77077, 0.77177, 0.77277, 0.77377, 0.77477, 0.77578, 0.77678, 0.77778, 0.77878, 0.77978, 0.78078, 0.78178, 0.78278, 0.78378, 0.78478, 0.78579, 0.78679, 0.78779, 0.78879, 0.78979, 0.79079, 0.79179,

0.79279, 0.79379, 0.79479, 0.7958, 0.7968, 0.7978, 0.7988, 0.7998, 0.8008, 0.8018, 0.8028, 0.8038, 0.8048, 0.80581, 0.80681, 0.80781, 0.80881, 0.80981, 0.81081, 0.81181, 0.81281, 0.81381, 0.81481, 0.81582,

0.81682, 0.81782, 0.81882, 0.81982, 0.82082, 0.82182, 0.82282, 0.82382, 0.82482, 0.82583, 0.82683, 0.82783, 0.82883, 0.82983, 0.83083, 0.83183, 0.83283, 0.83383, 0.83483, 0.83584, 0.83684, 0.83784, 0.83884, 0.83984,

0.84084, 0.84184, 0.84284, 0.84384, 0.84484, 0.84585, 0.84685, 0.84785, 0.84885, 0.84985, 0.85085, 0.85185, 0.85285, 0.85385, 0.85485, 0.85586, 0.85686, 0.85786, 0.85886, 0.85986, 0.86086, 0.86186, 0.86286, 0.86386,

0.86486, 0.86587, 0.86687, 0.86787, 0.86887, 0.86987, 0.87087, 0.87187, 0.87287, 0.87387, 0.87487, 0.87588, 0.87688, 0.87788, 0.87888, 0.87988, 0.88088, 0.88188, 0.88288, 0.88388, 0.88488, 0.88589, 0.88689, 0.88789,

0.88889, 0.88989, 0.89089, 0.89189, 0.89289, 0.89389, 0.89489, 0.8959, 0.8969, 0.8979, 0.8989, 0.8999, 0.9009, 0.9019, 0.9029, 0.9039, 0.9049, 0.90591, 0.90691, 0.90791, 0.90891, 0.90991, 0.91091, 0.91191,

0.91291, 0.91391, 0.91491, 0.91592, 0.91692, 0.91792, 0.91892, 0.91992, 0.92092, 0.92192, 0.92292, 0.92392, 0.92492, 0.92593, 0.92693, 0.92793, 0.92893, 0.92993, 0.93093, 0.93193, 0.93293, 0.93393, 0.93493, 0.93594,

0.93694, 0.93794, 0.93894, 0.93994, 0.94094, 0.94194, 0.94294, 0.94394, 0.94494, 0.94595, 0.94695, 0.94795, 0.94895, 0.94995, 0.95095, 0.95195, 0.95295, 0.95395, 0.95495, 0.95596, 0.95696, 0.95796, 0.95896, 0.95996,

0.96096, 0.96196, 0.96296, 0.96396, 0.96496, 0.96597, 0.96697, 0.96797, 0.96897, 0.96997, 0.97097, 0.97197, 0.97297, 0.97397, 0.97497, 0.97598, 0.97698, 0.97798, 0.97898, 0.97998, 0.98098, 0.98198, 0.98298, 0.98398,

0.98498, 0.98599, 0.98699, 0.98799, 0.98899, 0.98999, 0.99099, 0.99199, 0.99299, 0.99399, 0.99499, 0.996, 0.997, 0.998, 0.999, 1]), array([[ 0.99562, 0.99562, 0.99344, 0.99344, 0.99344, 0.99125, 0.98687, 0.98468, 0.98031, 0.97374, 0.97374, 0.97374, 0.97374, 0.97374, 0.97374, 0.97155, 0.97155, 0.96937, 0.96937, 0.96937, 0.96718, 0.96718, 0.96499,

0.96499, 0.96499, 0.9628, 0.96061, 0.96061, 0.96061, 0.96061, 0.96061, 0.96061, 0.96061, 0.95842, 0.95842, 0.95842, 0.95842, 0.95842, 0.95624, 0.95624, 0.95624, 0.95624, 0.95624, 0.95624, 0.95405, 0.95405,

0.95405, 0.95405, 0.95405, 0.95405, 0.95405, 0.95405, 0.95186, 0.94967, 0.94967, 0.94967, 0.94967, 0.94748, 0.9453, 0.9453, 0.9453, 0.9453, 0.9453, 0.94311, 0.94311, 0.94311, 0.94092, 0.94092, 0.93873,

0.93654, 0.93435, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.93217, 0.92998, 0.92998, 0.92998, 0.92998, 0.92998, 0.92998, 0.92998, 0.92998,

0.92998, 0.92998, 0.92998, 0.92931, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.92779, 0.9256, 0.9256, 0.9256, 0.92341, 0.92341, 0.92341, 0.92341,

0.92341, 0.92341, 0.92248, 0.92123, 0.92123, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91904, 0.91685, 0.91685, 0.91685, 0.91685, 0.91685,